cauc-cs Big Data: Collecting Log Data into HDFS with Flume

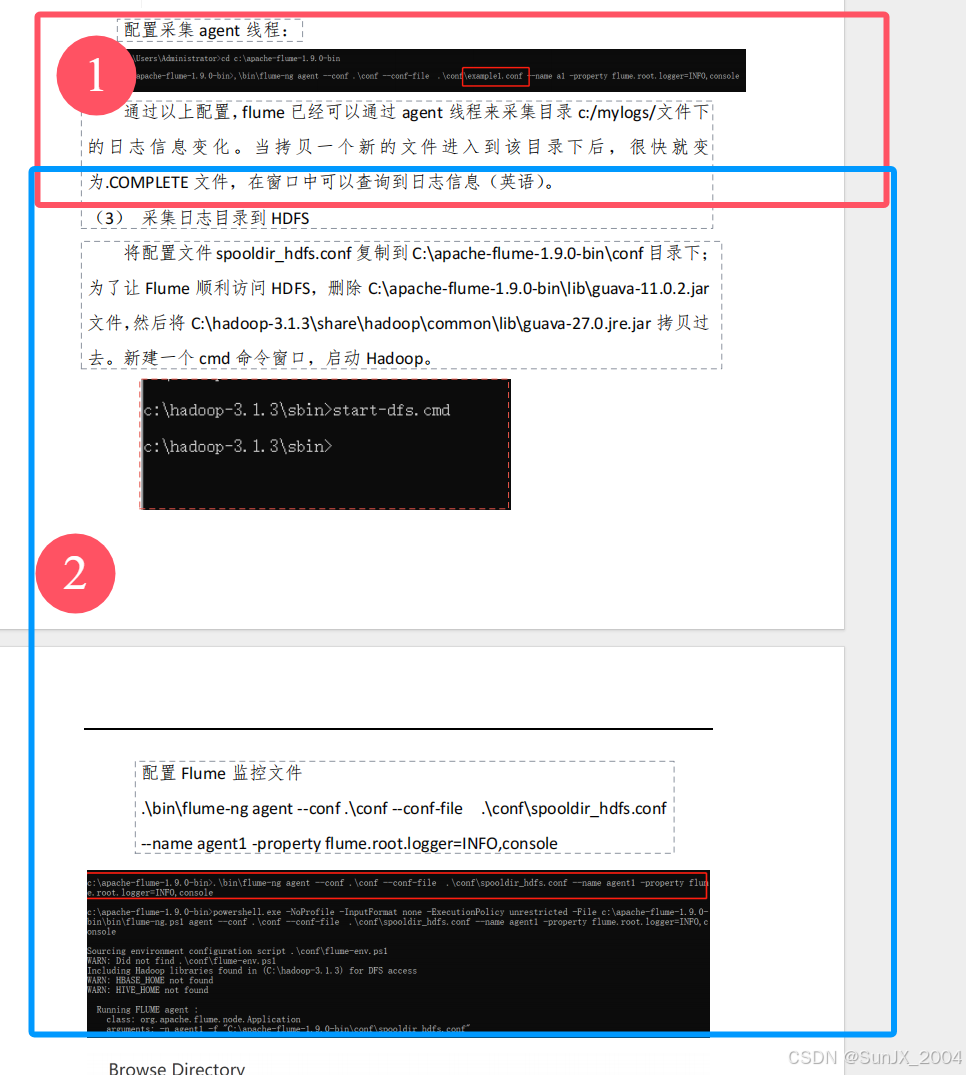

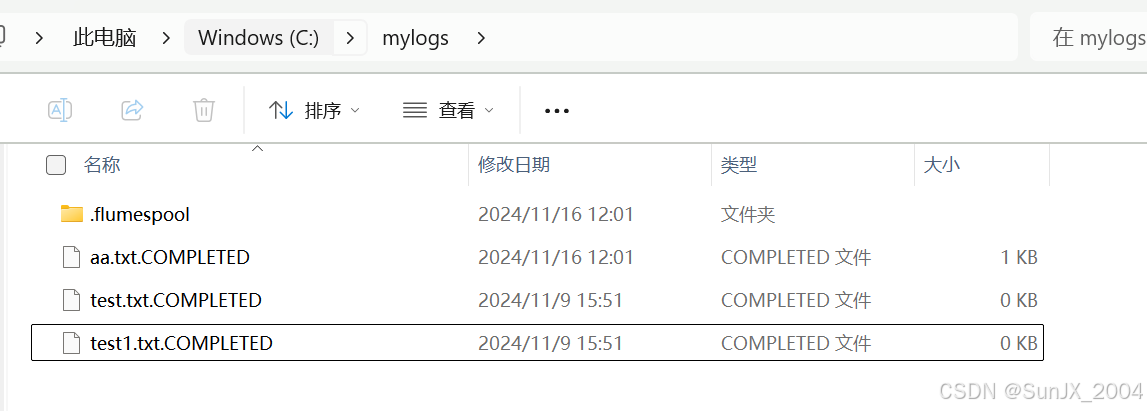

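I spent a long time studying the teacher's PDF and still could not get the data consumed by Flume into HDFS (as the screenshots show, the files were consumed by Flume and their suffix was changed). After some digging I found that although ① and ② follow one another in the course material, ② should not be done on top of ①.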

Running FLUME agent :

class: org.apache.flume.node.Application

arguments: -n a1 -f "C:\apache-flume-1.9.0-bin\conf\example1.conf"

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/C:/apache-flume-1.9.0-bin/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/C:/hadoop-3.1.3/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

2024-11-16 11:19:10,718 (lifecycleSupervisor-1-0) [INFO - org.apache.flume.node.PollingPropertiesFileConfigurationProvider.start(PollingPropertiesFileConfigurationProvider.java:62)] Configuration provider starting

2024-11-16 11:19:10,721 (conf-file-poller-0) [INFO - org.apache.flume.node.PollingPropertiesFileConfigurationProvider$FileWatcherRunnable.run(PollingPropertiesFileConfigurationProvider.java:138)] Reloading configuration file:C:\apache-flume-1.9.0-bin\conf\example1.conf

2024-11-16 11:19:10,725 (conf-file-poller-0) [INFO - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addComponentConfig(FlumeConfiguration.java:1203)] Processing:c1

2024-11-16 11:19:10,725 (conf-file-poller-0) [INFO - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addComponentConfig(FlumeConfiguration.java:1203)] Processing:c1

2024-11-16 11:19:10,726 (conf-file-poller-0) [INFO - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addComponentConfig(FlumeConfiguration.java:1203)] Processing:r1

2024-11-16 11:19:10,726 (conf-file-poller-0) [INFO - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addProperty(FlumeConfiguration.java:1117)] Added sinks: k1 Agent: a1

2024-11-16 11:19:10,726 (conf-file-poller-0) [INFO - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addComponentConfig(FlumeConfiguration.java:1203)] Processing:k1

2024-11-16 11:19:10,726 (conf-file-poller-0) [INFO - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addComponentConfig(FlumeConfiguration.java:1203)] Processing:c1

2024-11-16 11:19:10,726 (conf-file-poller-0) [INFO - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addComponentConfig(FlumeConfiguration.java:1203)] Processing:r1

2024-11-16 11:19:10,726 (conf-file-poller-0) [INFO - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addComponentConfig(FlumeConfiguration.java:1203)] Processing:r1

2024-11-16 11:19:10,726 (conf-file-poller-0) [INFO - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addComponentConfig(FlumeConfiguration.java:1203)] Processing:k1

2024-11-16 11:19:10,726 (conf-file-poller-0) [WARN - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.validateConfigFilterSet(FlumeConfiguration.java:623)] Agent configuration for 'a1' has no configfilters.

2024-11-16 11:19:10,735 (conf-file-poller-0) [WARN - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.validateSinks(FlumeConfiguration.java:884)] Could not configure sink k1 due to: No channel configured for sink: k1

org.apache.flume.conf.ConfigurationException: No channel configured for sink: k1

at org.apache.flume.conf.sink.SinkConfiguration.configure(SinkConfiguration.java:52)

at org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.validateSinks(FlumeConfiguration.java:867)

at org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.isValid(FlumeConfiguration.java:383)

at org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.access$000(FlumeConfiguration.java:228)

at org.apache.flume.conf.FlumeConfiguration.validateConfiguration(FlumeConfiguration.java:153)

at org.apache.flume.conf.FlumeConfiguration.<init>(FlumeConfiguration.java:133)

at org.apache.flume.node.PropertiesFileConfigurationProvider.getFlumeConfiguration(PropertiesFileConfigurationProvider.java:194)

at org.apache.flume.node.AbstractConfigurationProvider.getConfiguration(AbstractConfigurationProvider.java:97)

at org.apache.flume.node.PollingPropertiesFileConfigurationProvider$FileWatcherRunnable.run(PollingPropertiesFileConfigurationProvider.java:145)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:308)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:180)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:294)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

2024-11-16 11:19:10,737 (conf-file-poller-0) [INFO - org.apache.flume.conf.FlumeConfiguration.validateConfiguration(FlumeConfiguration.java:163)] Post-validation flume configuration contains configuration for agents: [a1]

2024-11-16 11:19:10,737 (conf-file-poller-0) [INFO - org.apache.flume.node.AbstractConfigurationProvider.loadChannels(AbstractConfigurationProvider.java:151)] Creating channels

2024-11-16 11:19:10,741 (conf-file-poller-0) [INFO - org.apache.flume.channel.DefaultChannelFactory.create(DefaultChannelFactory.java:42)] Creating instance of channel c1 type memory

2024-11-16 11:19:10,744 (conf-file-poller-0) [INFO - org.apache.flume.node.AbstractConfigurationProvider.loadChannels(AbstractConfigurationProvider.java:205)] Created channel c1

2024-11-16 11:19:10,744 (conf-file-poller-0) [INFO - org.apache.flume.source.DefaultSourceFactory.create(DefaultSourceFactory.java:41)] Creating instance of source r1, type spooldir

2024-11-16 11:19:10,750 (conf-file-poller-0) [INFO - org.apache.flume.node.AbstractConfigurationProvider.getConfiguration(AbstractConfigurationProvider.java:120)] Channel c1 connected to [r1]

2024-11-16 11:19:10,751 (conf-file-poller-0) [INFO - org.apache.flume.node.Application.startAllComponents(Application.java:162)] Starting new configuration:{ sourceRunners:{r1=EventDrivenSourceRunner: { source:Spool Directory source r1: { spoolDir: C:/mylogs/ } }} sinkRunners:{} channels:{c1=org.apache.flume.channel.MemoryChannel{name: c1}} }

2024-11-16 11:19:10,752 (conf-file-poller-0) [INFO - org.apache.flume.node.Application.startAllComponents(Application.java:169)] Starting Channel c1

2024-11-16 11:19:10,777 (lifecycleSupervisor-1-0) [INFO - org.apache.flume.instrumentation.MonitoredCounterGroup.register(MonitoredCounterGroup.java:119)] Monitored counter group for type: CHANNEL, name: c1: Successfully registered new MBean.

2024-11-16 11:19:10,778 (lifecycleSupervisor-1-0) [INFO - org.apache.flume.instrumentation.MonitoredCounterGroup.start(MonitoredCounterGroup.java:95)] Component type: CHANNEL, name: c1 started

2024-11-16 11:19:10,778 (conf-file-poller-0) [INFO - org.apache.flume.node.Application.startAllComponents(Application.java:207)] Starting Source r1

2024-11-16 11:19:10,778 (lifecycleSupervisor-1-1) [INFO - org.apache.flume.source.SpoolDirectorySource.start(SpoolDirectorySource.java:85)] SpoolDirectorySource source starting with directory: C:/mylogs/

2024-11-16 11:19:11,022 (lifecycleSupervisor-1-1) [INFO - org.apache.flume.instrumentation.MonitoredCounterGroup.register(MonitoredCounterGroup.java:119)] Monitored counter group for type: SOURCE, name: r1: Successfully registered new MBean.

2024-11-16 11:19:11,022 (lifecycleSupervisor-1-1) [INFO - org.apache.flume.instrumentation.MonitoredCounterGroup.start(MonitoredCounterGroup.java:95)] Component type: SOURCE, name: r1 started

2024-11-16 11:19:27,505 (pool-3-thread-1) [INFO - org.apache.flume.client.avro.ReliableSpoolingFileEventReader.readEvents(ReliableSpoolingFileEventReader.java:384)] Last read took us just up to a file boundary. Rolling to the next file, if there is one.

2024-11-16 11:19:27,506 (pool-3-thread-1) [INFO - org.apache.flume.client.avro.ReliableSpoolingFileEventReader.rollCurrentFile(ReliableSpoolingFileEventReader.java:497)] Preparing to move file C:\mylogs\aa.txt to C:\mylogs\aa.txt.COMPLETED

2024-11-16 11:21:56,763 (pool-3-thread-1) [INFO - org.apache.flume.client.avro.ReliableSpoolingFileEventReader.readEvents(ReliableSpoolingFileEventReader.java:384)] Last read took us just up to a file boundary. Rolling to the next file, if there is one.

2024-11-16 11:21:56,765 (pool-3-thread-1) [INFO - org.apache.flume.client.avro.ReliableSpoolingFileEventReader.rollCurrentFile(ReliableSpoolingFileEventReader.java:497)] Preparing to move file C:\mylogs\bb.txt to C:\mylogs\bb.txt.COMPLETED

2024-11-16 11:28:18,154 (pool-3-thread-1) [INFO - org.apache.flume.client.avro.ReliableSpoolingFileEventReader.readEvents(ReliableSpoolingFileEventReader.java:384)] Last read took us just up to a file boundary. Rolling to the next file, if there is one.

2024-11-16 11:28:18,155 (pool-3-thread-1) [INFO - org.apache.flume.client.avro.ReliableSpoolingFileEventReader.rollCurrentFile(ReliableSpoolingFileEventReader.java:497)] Preparing to move file C:\mylogs\cc.txt to C:\mylogs\cc.txt.COMPLETED

2024-11-16 11:29:17,925 (pool-3-thread-1) [INFO - org.apache.flume.client.avro.ReliableSpoolingFileEventReader.readEvents(ReliableSpoolingFileEventReader.java:384)] Last read took us just up to a file boundary. Rolling to the next file, if there is one.

2024-11-16 11:29:17,926 (pool-3-thread-1) [INFO - org.apache.flume.client.avro.ReliableSpoolingFileEventReader.rollCurrentFile(ReliableSpoolingFileEventReader.java:497)] Preparing to move file C:\mylogs\dd.txt to C:\mylogs\dd.txt.COMPLETED

2024-11-16 11:33:25,717 (pool-3-thread-1) [INFO - org.apache.flume.client.avro.ReliableSpoolingFileEventReader.readEvents(ReliableSpoolingFileEventReader.java:384)] Last read took us just up to a file boundary. Rolling to the next file, if there is one.

2024-11-16 11:33:25,718 (pool-3-thread-1) [INFO - org.apache.flume.client.avro.ReliableSpoolingFileEventReader.rollCurrentFile(ReliableSpoolingFileEventReader.java:497)] Preparing to move file C:\mylogs\新建 文本文档.txt to C:\mylogs\新建 文本文档.txt.COMPLETED

2024-11-16 11:47:16,372 (pool-3-thread-1) [INFO - org.apache.flume.client.avro.ReliableSpoolingFileEventReader.readEvents(ReliableSpoolingFileEventReader.java:384)] Last read took us just up to a file boundary. Rolling to the next file, if there is one.

2024-11-16 11:47:16,373 (pool-3-thread-1) [INFO - org.apache.flume.client.avro.ReliableSpoolingFileEventReader.rollCurrentFile(ReliableSpoolingFileEventReader.java:497)] Preparing to move file C:\mylogs\gg.txt to C:\mylogs\gg.txt.COMPLETED

2024-11-16 11:55:46,365 (pool-3-thread-1) [INFO - org.apache.flume.client.avro.ReliableSpoolingFileEventReader.readEvents(ReliableSpoolingFileEventReader.java:384)] Last read took us just up to a file boundary. Rolling to the next file, if there is one.

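2024-11-16 11:55:46,365 (pool-3-thread-1) [INFO - org.apache.flume.client.avro.ReliableSpoolingFileEventReader.rollCurrentFile(ReliableSpoolingFileEventReader.java:497)] Preparing to move file C:\mylogs\aa.txt to C:\mylogs\aa.txt.COMPLETED

After finishing ① I left its terminal open and started ② in a second terminal, which reported errors. The log above shows that Flume is reading files from the C:\mylogs\ directory through SpoolDirectorySource, which is a common log-collection setup. It also shows Flume processing the files and renaming them with the .COMPLETED suffix, which means it has read them and marked them as processed.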
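To see at a glance which files the spooling source has already consumed, the directory can be split by suffix. This is only a rough sketch: `spool_status` is a hypothetical helper, not part of Flume, and it assumes the suffix is the `.COMPLETED` seen in the log.

```python
from pathlib import Path


def spool_status(spool_dir, suffix=".COMPLETED"):
    """Split the files in spool_dir into (pending, completed) name lists.

    Assumes Flume marks consumed files by appending `suffix`,
    as the rename messages in the log above show.
    """
    pending, completed = [], []
    for path in sorted(Path(spool_dir).iterdir()):
        if not path.is_file():
            continue  # ignore subdirectories
        if path.name.endswith(suffix):
            completed.append(path.name)
        else:
            pending.append(path.name)
    return pending, completed
```

Calling `spool_status("C:/mylogs/")` on the directory from this post would return the file names still waiting to be read and the ones already renamed.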

example1.conf

# Name the three components

a1.sources = r1

a1.channels = c1

a1.sinks = k1

# Define the source

a1.sources.r1.type = spooldir

a1.sources.r1.spoolDir = C:/mylogs/

# Define the channel

a1.channels.c1.type = memory

a1.channels.c1.capacity = 10000

a1.channels.c1.transactionCapacity = 100

# Define the sink

a1.sinks.k1.type = logger

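# Wire the source, channel, and sink together

a1.sources.r1.channels = c1

# A sink takes the singular property "channel". The original file used "channels" here, which is what produced "No channel configured for sink: k1" in the log above.

a1.sinks.k1.channel = c1

spooldir_hdfs.conf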

# Name the three components

agent1.sources = source1

agent1.sinks = sink1

agent1.channels = channel1

# Configure the source

agent1.sources.source1.type = spooldir

agent1.sources.source1.spoolDir = C:/mylogs/

agent1.sources.source1.fileHeader = false

# Configure the interceptor

#agent1.sources.source1.interceptors = i1

#agent1.sources.source1.interceptors.i1.type = host

#agent1.sources.source1.interceptors.i1.hostHeader = hostname

# Configure the sink

agent1.sinks.sink1.type = hdfs

agent1.sinks.sink1.hdfs.path = hdfs://localhost:9000/weblog/%y-%m-%d/%H-%M

agent1.sinks.sink1.hdfs.filePrefix = access_log

agent1.sinks.sink1.hdfs.maxOpenFiles = 5000

agent1.sinks.sink1.hdfs.batchSize = 100

agent1.sinks.sink1.hdfs.fileType = DataStream

agent1.sinks.sink1.hdfs.writeFormat = Text

agent1.sinks.sink1.hdfs.rollSize = 102400

agent1.sinks.sink1.hdfs.rollCount = 1000000

agent1.sinks.sink1.hdfs.rollInterval = 60

#agent1.sinks.sink1.hdfs.round = true

#agent1.sinks.sink1.hdfs.roundValue = 10

#agent1.sinks.sink1.hdfs.roundUnit = minute

agent1.sinks.sink1.hdfs.useLocalTimeStamp = true

# Use a channel which buffers events in memory

agent1.channels.channel1.type = memory

agent1.channels.channel1.keep-alive = 120

agent1.channels.channel1.capacity = 500000

agent1.channels.channel1.transactionCapacity = 600

# Bind the source and sink to the channel

agent1.sources.source1.channels = channel1

agent1.sinks.sink1.channel = channel1

So Flume was actually running with the example1.conf configuration, which contains no HDFS settings at all.
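The warning `No channel configured for sink: k1` in the first log is what Flume reports when a sink has no `channel` property (sources use the plural `channels`, sinks the singular `channel`). A quick pre-flight check can catch this before starting the agent. The sketch below is an illustration under assumptions: `parse_properties` and `sinks_without_channel` are hypothetical helpers, and the parser only handles plain `key = value` lines, not the full Java properties syntax.

```python
def parse_properties(text):
    """Parse simple `key = value` lines, skipping blanks and # comments."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def sinks_without_channel(props, agent):
    """Return the sinks of `agent` lacking an `<agent>.sinks.<name>.channel` entry."""
    sink_names = props.get(f"{agent}.sinks", "").split()
    return [name for name in sink_names
            if not props.get(f"{agent}.sinks.{name}.channel")]


# Illustrative fragment with the plural key on the sink
broken = """
a1.sinks = k1
a1.sinks.k1.type = logger
a1.sinks.k1.channels = c1
"""
print(sinks_without_channel(parse_properties(broken), "a1"))  # prints ['k1']
```

Running the same check on spooldir_hdfs.conf with the agent name `agent1` should return an empty list, since `agent1.sinks.sink1.channel` is set there.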

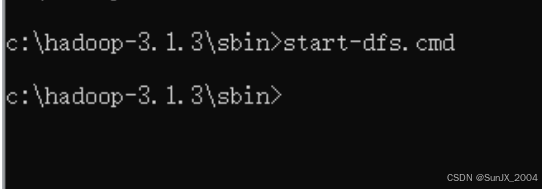

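Restart Hadoop and Flume:

After moving a file into C:\mylogs\, its name was immediately given the .COMPLETED suffix, but the file could not be found in HDFS. The Flume terminal showed this error: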

2024-11-20 14:10:10,589 (pool-5-thread-1) [INFO - org.apache.flume.client.avro.ReliableSpoolingFileEventReader.rollCurrentFile(ReliableSpoolingFileEventReader.java:497)] Preparing to move file C:\mylogs\cc.txt to C:\mylogs\cc.txt.COMPLETED

2024-11-20 14:10:10,595 (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.hdfs.HDFSDataStream.configure(HDFSDataStream.java:57)] Serializer = TEXT, UseRawLocalFileSystem = false

2024-11-20 14:10:10,799 (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.hdfs.BucketWriter.open(BucketWriter.java:246)] Creating hdfs://localhost:9000/weblog/24-11-20/14-10/access_log.1732083010595.tmp

2024-11-20 14:10:12,254 (SinkRunner-PollingRunner-DefaultSinkProcessor) [WARN - org.apache.flume.sink.hdfs.HDFSEventSink.process(HDFSEventSink.java:454)] HDFS IO error

org.apache.hadoop.hdfs.server.namenode.SafeModeException: Cannot create file/weblog/24-11-20/14-10/access_log.1732083010595.tmp. Name node is in safe mode.

The reported blocks 1 needs additional 1 blocks to reach the threshold 0.9990 of total blocks 3.

The minimum number of live datanodes is not required. Safe mode will be turned off automatically once the thresholds have been reached. NamenodeHostName:localhost

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.newSafemodeException(FSNamesystem.java:1468)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkNameNodeSafeMode(FSNamesystem.java:1455)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFileInt(FSNamesystem.java:2429)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFile(FSNamesystem.java:2375)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.create(NameNodeRpcServer.java:791)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.create(ClientNamenodeProtocolServerSideTranslatorPB.java:469)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:527)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1036)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:1000)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:928)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1729)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2916)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.ipc.RemoteException.instantiateException(RemoteException.java:121)

at org.apache.hadoop.ipc.RemoteException.unwrapRemoteException(RemoteException.java:88)

at org.apache.hadoop.hdfs.DFSOutputStream.newStreamForCreate(DFSOutputStream.java:281)

at org.apache.hadoop.hdfs.DFSClient.create(DFSClient.java:1212)

at org.apache.hadoop.hdfs.DFSClient.create(DFSClient.java:1191)

at org.apache.hadoop.hdfs.DFSClient.create(DFSClient.java:1129)

at org.apache.hadoop.hdfs.DistributedFileSystem$8.doCall(DistributedFileSystem.java:531)

at org.apache.hadoop.hdfs.DistributedFileSystem$8.doCall(DistributedFileSystem.java:528)

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)

at org.apache.hadoop.hdfs.DistributedFileSystem.create(DistributedFileSystem.java:542)

at org.apache.hadoop.hdfs.DistributedFileSystem.create(DistributedFileSystem.java:469)

at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:1118)

at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:1098)

at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:987)

at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:975)

at org.apache.flume.sink.hdfs.HDFSDataStream.doOpen(HDFSDataStream.java:81)

at org.apache.flume.sink.hdfs.HDFSDataStream.open(HDFSDataStream.java:108)

at org.apache.flume.sink.hdfs.BucketWriter$1.call(BucketWriter.java:257)

at org.apache.flume.sink.hdfs.BucketWriter$1.call(BucketWriter.java:247)

at org.apache.flume.sink.hdfs.BucketWriter$8$1.run(BucketWriter.java:727)

at org.apache.flume.auth.SimpleAuthenticator.execute(SimpleAuthenticator.java:50)

at org.apache.flume.sink.hdfs.BucketWriter$8.call(BucketWriter.java:724)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.hdfs.server.namenode.SafeModeException): Cannot create file/weblog/24-11-20/14-10/access_log.1732083010595.tmp. Name node is in safe mode.

The reported blocks 1 needs additional 1 blocks to reach the threshold 0.9990 of total blocks 3.

The minimum number of live datanodes is not required. Safe mode will be turned off automatically once the thresholds have been reached. NamenodeHostName:localhost

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.newSafemodeException(FSNamesystem.java:1468)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkNameNodeSafeMode(FSNamesystem.java:1455)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFileInt(FSNamesystem.java:2429)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFile(FSNamesystem.java:2375)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.create(NameNodeRpcServer.java:791)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.create(ClientNamenodeProtocolServerSideTranslatorPB.java:469)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:527)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1036)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:1000)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:928)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1729)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2916)

at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1545)

at org.apache.hadoop.ipc.Client.call(Client.java:1491)

at org.apache.hadoop.ipc.Client.call(Client.java:1388)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:233)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:118)

at com.sun.proxy.$Proxy13.create(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.create(ClientNamenodeProtocolTranslatorPB.java:366)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:422)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:165)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:157)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:95)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:359)

at com.sun.proxy.$Proxy14.create(Unknown Source)

at org.apache.hadoop.hdfs.DFSOutputStream.newStreamForCreate(DFSOutputStream.java:276)

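... 23 more

The log shows that the key problem is that the HDFS NameNode is in Safe Mode. In Safe Mode the NameNode rejects all write operations until it has confirmed that enough block replicas have been reported by the DataNodes and the cluster is healthy.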
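The numbers in the SafeModeException can be checked by hand. The sketch below assumes the NameNode requires the integer part of total blocks × threshold to be reported; that rounding rule is an assumption chosen because it reproduces the message, and `blocks_still_needed` is a hypothetical helper, not a Hadoop API.

```python
def blocks_still_needed(total_blocks, reported_blocks, threshold):
    """Blocks that must still be reported before the threshold is reached.

    Assumption: the required count is total_blocks * threshold truncated
    to an integer, which matches the figures in the exception text.
    """
    required = int(total_blocks * threshold)
    return max(required - reported_blocks, 0)


# Values taken from the exception: 3 total blocks, 1 reported, threshold 0.9990
print(blocks_still_needed(3, 1, 0.9990))  # prints 1
```

With 3 total blocks the required count is 2, so one more block has to be reported, which agrees with "needs additional 1 blocks".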

Solution: see the CSDN blog post "HDFS 报告 NameNode 处于安全模式,退出安全模式" (HDFS reports that the NameNode is in safe mode; how to leave safe mode).
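For reference, the usual way to inspect and leave safe mode is the `hdfs dfsadmin -safemode get` and `hdfs dfsadmin -safemode leave` commands. The sketch below wraps them in Python; it is a minimal illustration, not the method from the linked post. `leave_safemode_if_needed` and `safemode_is_on` are hypothetical helper names, and the check assumes the `get` command prints a line containing "Safe mode is ON" or "Safe mode is OFF".

```python
import subprocess


def safemode_is_on(output: str) -> bool:
    """Interpret the text printed by `hdfs dfsadmin -safemode get`."""
    return "safe mode is on" in output.lower()


def leave_safemode_if_needed(hdfs_cmd="hdfs", run=subprocess.run):
    """Leave safe mode only if the NameNode reports it is on.

    hdfs_cmd: name of the hdfs launcher; on Windows this may need to be
              "hdfs.cmd" or a full path (assumption about the local setup).
    run:      injectable runner, defaults to subprocess.run.
    Returns True if a `-safemode leave` command was issued.
    """
    status = run([hdfs_cmd, "dfsadmin", "-safemode", "get"],
                 capture_output=True, text=True, check=True)
    if not safemode_is_on(status.stdout):
        return False
    run([hdfs_cmd, "dfsadmin", "-safemode", "leave"],
        capture_output=True, text=True, check=True)
    return True
```

Forcing the NameNode out of safe mode is reasonable on a single-node practice cluster like this one; on a real cluster it is usually better to find out why blocks are missing first.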

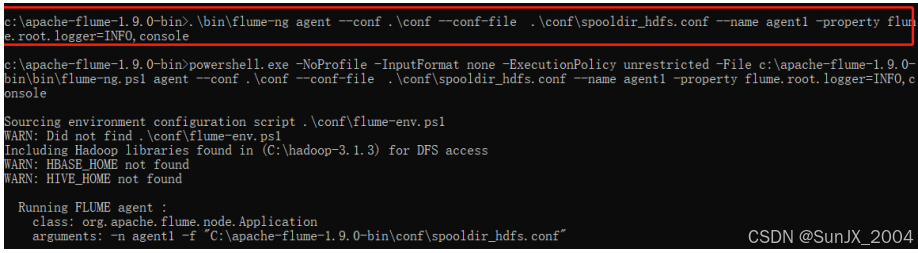

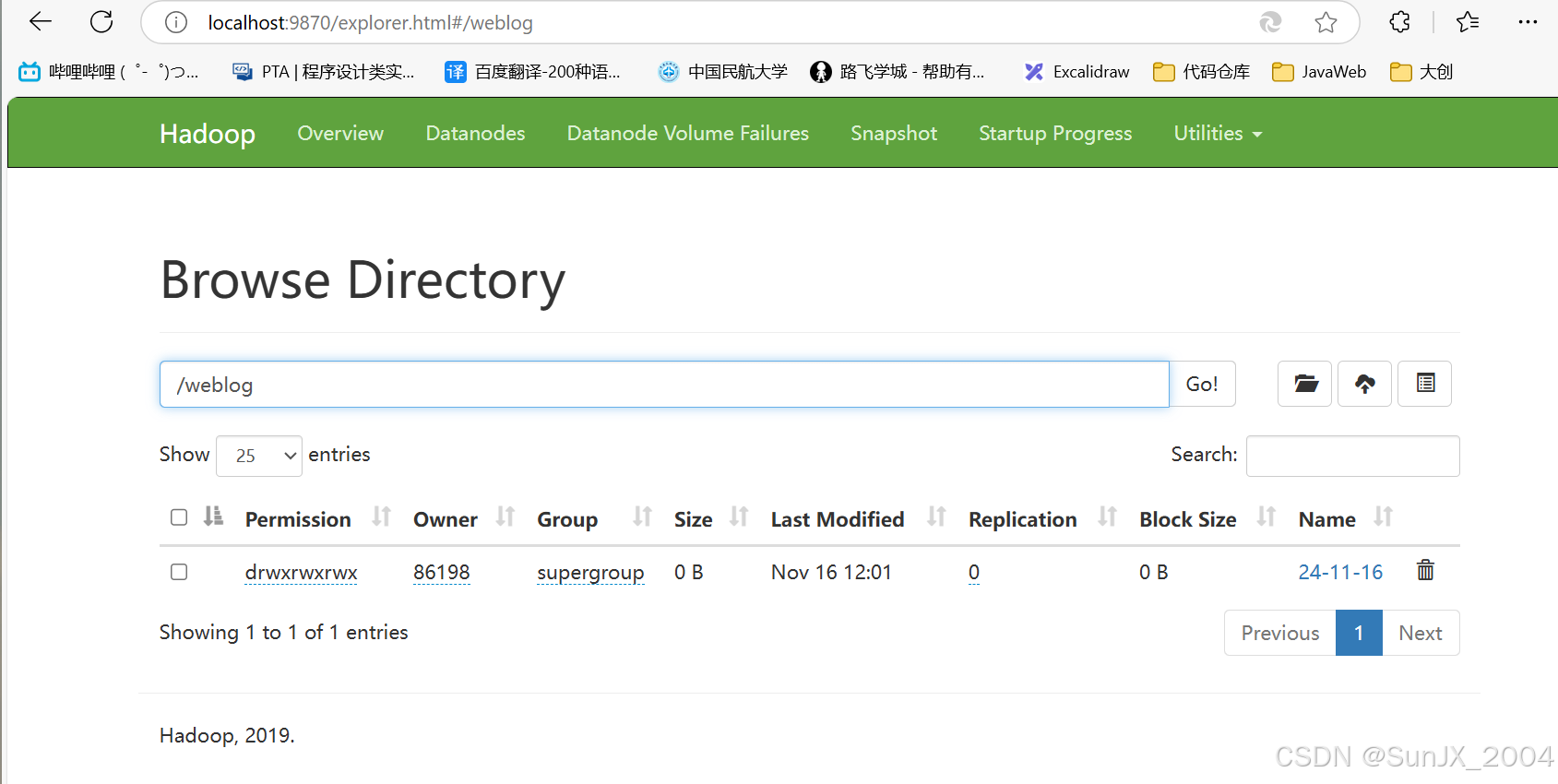

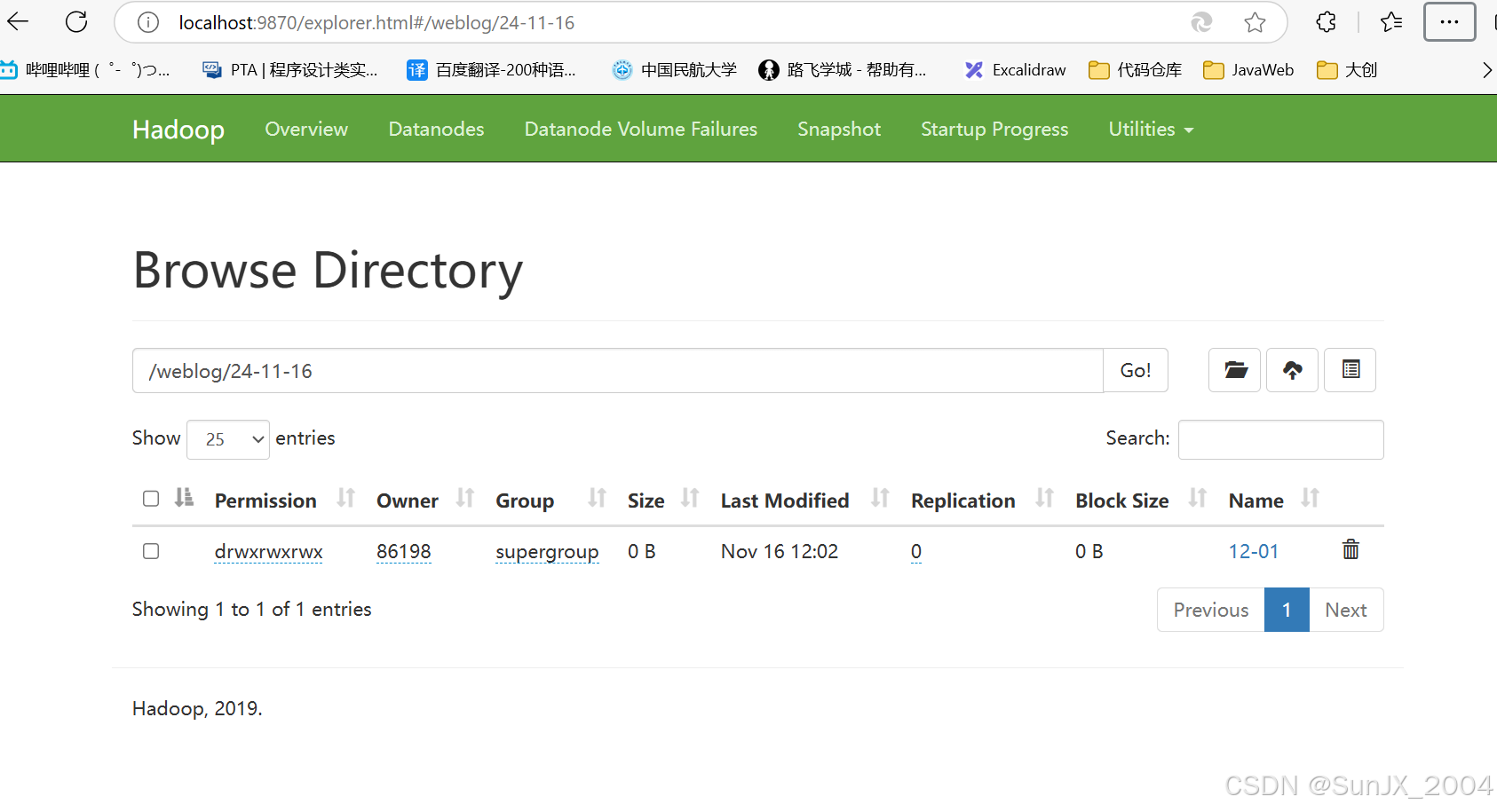

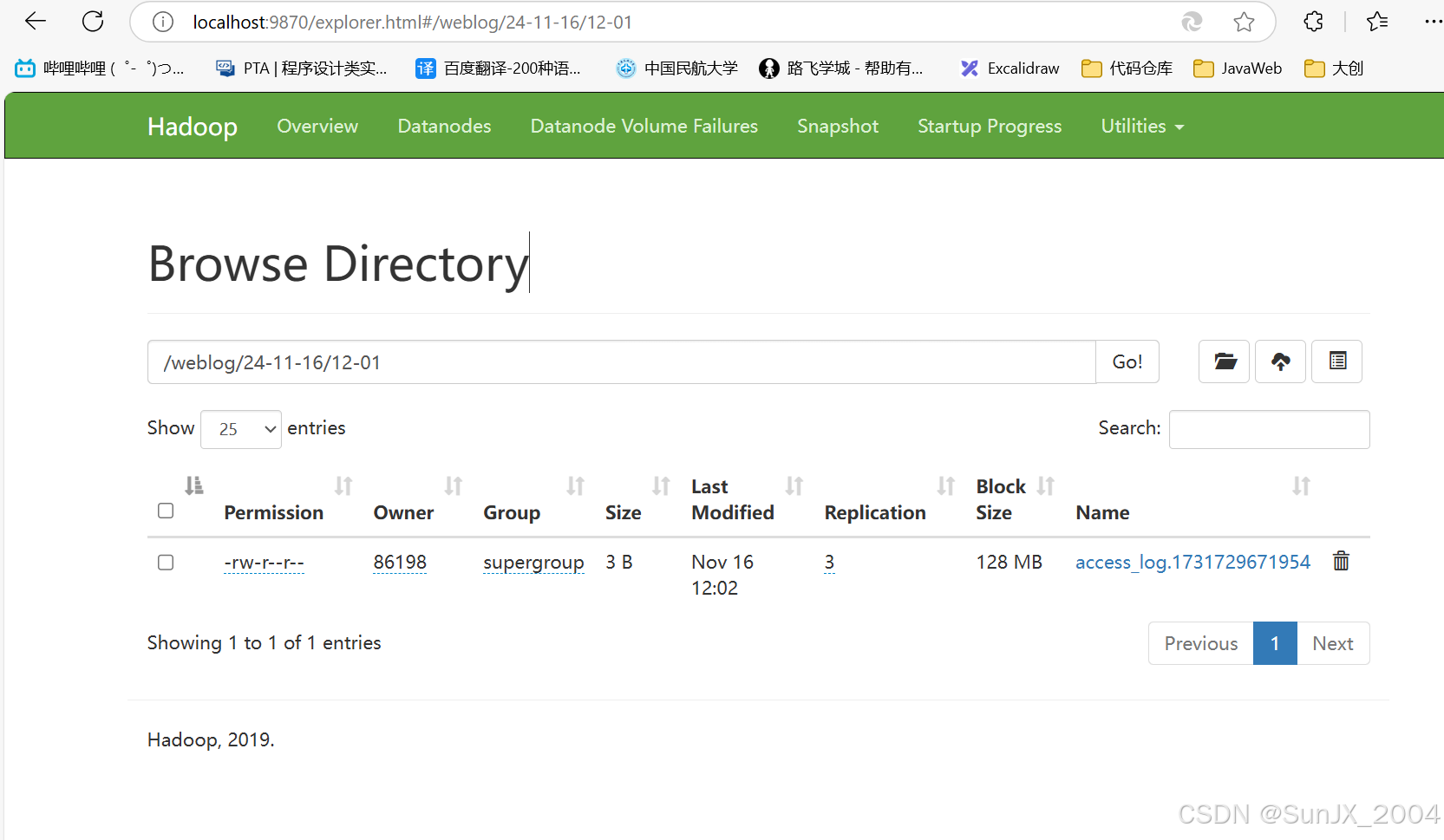

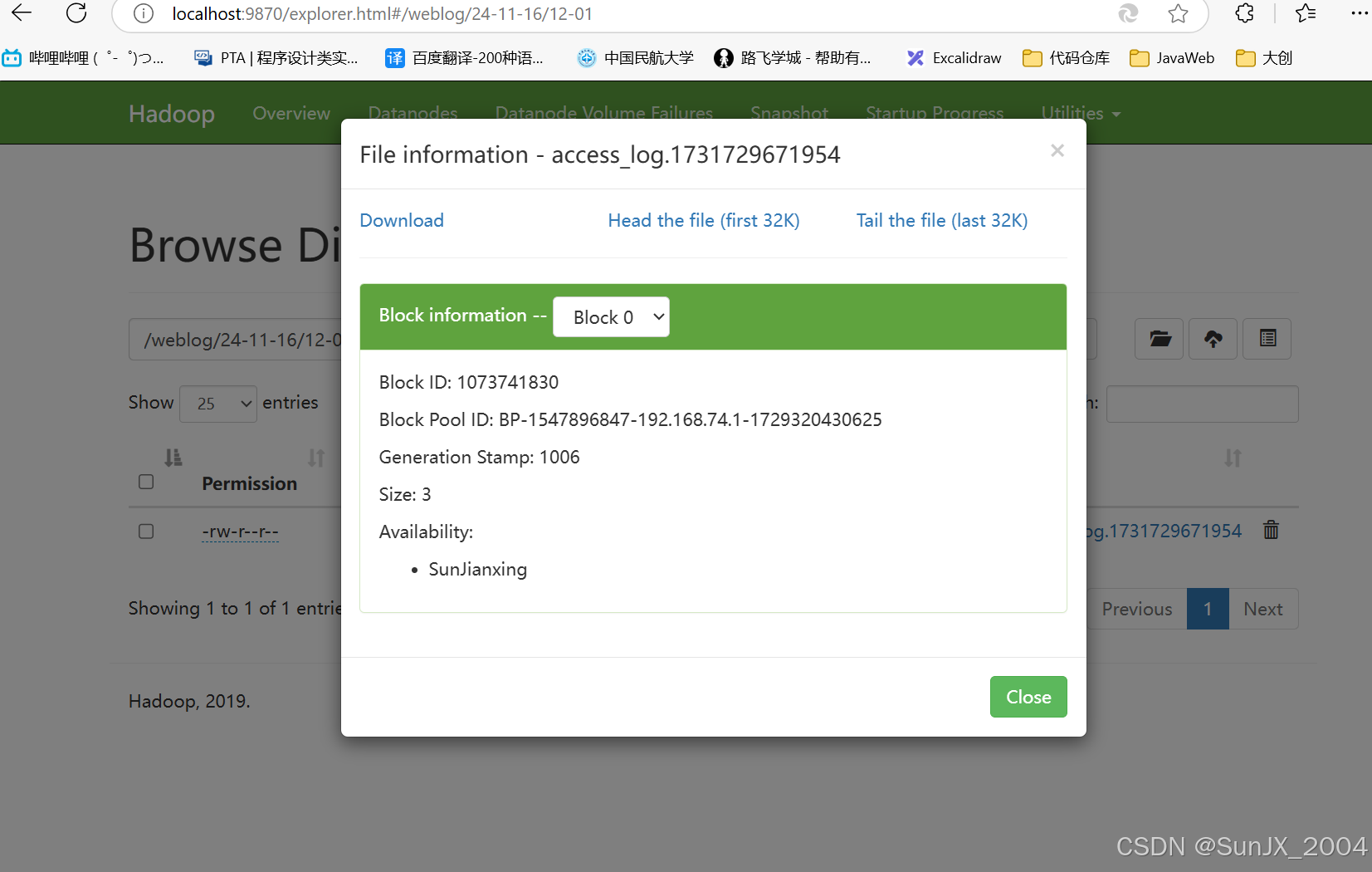

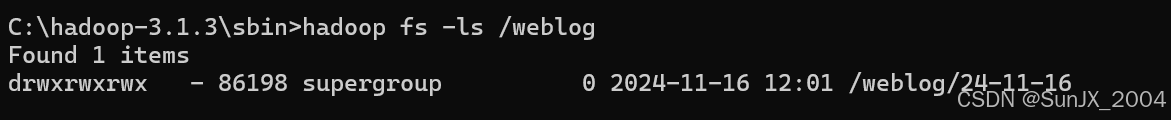

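Having understood how Flume works from the results of running example1.conf, I closed that Flume terminal, opened a new one, and started Flume with spooldir_hdfs.conf. Once it was running normally, I checked HDFS and found the log file from this run.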
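To know which HDFS directory to look in, the `hdfs.path` pattern from spooldir_hdfs.conf can be expanded by hand. The sketch below is an illustration only: it relies on the escapes used in that pattern (`%y`, `%m`, `%d`, `%H`, `%M`) having the same meaning in Python's `strftime`, which does not hold for every Flume escape sequence, and `expand_hdfs_path` is a hypothetical helper rather than anything Flume provides.

```python
from datetime import datetime


def expand_hdfs_path(pattern: str, when: datetime) -> str:
    """Expand the date/time escapes in an hdfs.path pattern for time `when`."""
    return when.strftime(pattern)


# Timestamp of the BucketWriter line in the second log
event_time = datetime(2024, 11, 20, 14, 10, 10)
print(expand_hdfs_path("hdfs://localhost:9000/weblog/%y-%m-%d/%H-%M", event_time))
# prints hdfs://localhost:9000/weblog/24-11-20/14-10
```

Because `hdfs.useLocalTimeStamp = true`, the agent's local clock decides the directory, so a new `%H-%M` directory appears for each minute in which events arrive. Listing everything with `hdfs dfs -ls -R /weblog` is a simple way to find the file without computing the path.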
