Kerberos Authentication Setup and Kerberos Configuration for Big Data Components

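This article walks through configuring Kerberos authentication and integrating big data components with Kerberos. It covers: 1) creating the hdfs and HTTP principals and generating hdfs.keytab; 2) creating zookeeper.keytab for ZooKeeper authentication; 3) the steps for enabling Kerberos in ZooKeeper, including changes to zoo.cfg, jaas.conf and java.env; 4) syncing configuration and restarting services; 5) handling common errors. Later sections apply the same approach to Hadoop, HBase, Hive, Flink, Spark and Kafka.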

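Prerequisites

1. Kerberos is already installed, along with whichever of the following components you need: ZooKeeper, Hadoop, HBase, Hive, Flink, Spark, Kafka.

Not every component has to be installed; pick the ones you need.

2. Three machines are assumed: hadoop01, hadoop02 and hadoop03, with SSH access already set up between them.

3. All components are assumed to be installed under /opt.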

Creating credentials

Creating principals

Add the hdfs and HTTP principals for every server node in the cluster:

[root@hadoop01 ~]# for i in {01..03}; do kadmin.local -q "addprinc -randkey hdfs/hadoop$i.jedy.com.cn@jedy.com.cn"; done

[root@hadoop01 ~]# for i in {01..03}; do kadmin.local -q "addprinc -randkey HTTP/hadoop$i.jedy.com.cn@jedy.com.cn"; done

[root@hadoop01 ~]# kadmin.local -q "listprincs"
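As a rough sketch of what the two loops above do, the helper below prints (rather than runs) the kadmin.local command for each service/host pair, which can be useful for reviewing the principal names before creating them. The function name print_addprinc_cmds and the hadoopNN naming scheme are illustrative assumptions based on the examples in this article.

```shell
#!/bin/sh
# Dry run: print the kadmin.local commands for one service across N nodes.
# REALM and DOMAIN are taken from the examples above; adjust for your cluster.
REALM="jedy.com.cn"
DOMAIN="jedy.com.cn"

print_addprinc_cmds() {
    service="$1"   # e.g. hdfs or HTTP
    count="$2"     # number of nodes, e.g. 3
    i=1
    while [ "$i" -le "$count" ]; do
        # Build the fully qualified host name: hadoop01.<domain>, hadoop02.<domain>, ...
        host=$(printf 'hadoop%02d.%s' "$i" "$DOMAIN")
        printf 'kadmin.local -q "addprinc -randkey %s/%s@%s"\n' "$service" "$host" "$REALM"
        i=$((i + 1))
    done
}

print_addprinc_cmds hdfs 3
print_addprinc_cmds HTTP 3
```

Printing first and piping to sh only after review keeps a typo in the realm or host name from creating unwanted principals.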

Create the hdfs.keytab file

Export the keys into the keytab file:

[root@hadoop01 ~]# cd /var/kerberos/krb5kdc/

[root@hadoop01 krb5kdc]# for i in {01..03}; do kadmin.local -q "xst -k hdfs.keytab hdfs/hadoop$i.jedy.com.cn@jedy.com.cn"; done

[root@hadoop01 krb5kdc]# for i in {01..03}; do kadmin.local -q "xst -k hdfs.keytab HTTP/hadoop$i.jedy.com.cn@jedy.com.cn"; done

Use klist to list the entries in hdfs.keytab

[root@hadoop01 krb5kdc]# klist -ket hdfs.keytab

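Verify (no output means success)

[root@hadoop01 krb5kdc]# kinit -k -t hdfs.keytab hdfs/hadoop01.jedy.com.cn@jedy.com.cn

Copy hdfs.keytab into the Hadoop configuration directory on every node and set ownership and permissions (owner hdfs, mode 400)

[root@hadoop01 krb5kdc]# chmod 400 hdfs.keytab

[root@hadoop01 krb5kdc]# chown hdfs hdfs.keytab

[root@hadoop01 krb5kdc]# cp -p hdfs.keytab $HADOOP_HOME/etc/hadoop/hdfs.keytab

[root@hadoop01 krb5kdc]# for i in {02..03}; do scp -p $HADOOP_HOME/etc/hadoop/hdfs.keytab hadoop$i:$HADOOP_HOME/etc/hadoop/hdfs.keytab; ssh hadoop$i "chown hdfs $HADOOP_HOME/etc/hadoop/hdfs.keytab"; done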

Verify

[root@hadoop01 krb5kdc]# klist -ket $HADOOP_HOME/etc/hadoop/hdfs.keytab

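Create the zookeeper.keytab file (for ZooKeeper)

When HiveServer2 connects to a Kerberos-enabled ZooKeeper, the principal in the Server section of ZooKeeper's jaas.conf must be a zookeeper service principal.

If the zookeeper principals do not exist yet, create them first, then export the keys into the keytab file:

[root@hadoop01 ~]# for i in {01..03}; do kadmin.local -q "addprinc -randkey zookeeper/hadoop$i.jedy.com.cn@jedy.com.cn"; done

[root@hadoop01 ~]# mkdir -p /etc/security/keytab/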

[root@hadoop01 ~]# cd /etc/security/keytab/

[root@hadoop01 keytab]# for i in {01..03}; do kadmin.local -q "xst -k zookeeper.keytab zookeeper/hadoop$i.jedy.com.cn@jedy.com.cn"; done

Use klist to list the entries in zookeeper.keytab

[root@hadoop01 keytab]# klist -ket zookeeper.keytab

Verify (no output means success)

[root@hadoop01 keytab]# kinit -k -t /etc/security/keytab/zookeeper.keytab zookeeper/hadoop01.jedy.com.cn@jedy.com.cn

Distribute zookeeper.keytab

[root@hadoop01 keytab]# for i in {02..03}; do

ssh hadoop$i "mkdir -p /etc/security/keytab/";

scp zookeeper.keytab hadoop$i:/etc/security/keytab/zookeeper.keytab; done

Verify

[root@hadoop01 keytab]# for i in {02..03}; do

ssh hadoop$i "klist -ket /etc/security/keytab/zookeeper.keytab"

done

Configuring Kerberos for ZooKeeper

Enable Kerberos

zoo_hadoop.cfg (using the hadoop instance as an example)

#zk SASL

authProvider.1=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

#jaasLoginRenew=3600000

requireClientAuthScheme=sasl

zookeeper.sasl.client=true

kerberos.removeHostFromPrincipal=true

kerberos.removeRealmFromPrincipal=true

quorum.auth.enableSasl=true

quorum.auth.learner.saslLoginContext=Learner

quorum.auth.server.saslLoginContext=Server

quorum.auth.kerberos.servicePrincipal=hdfs/_HOST@jedy.com.cn

4lw.commands.whitelist=mntr,conf,ruok,cons

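jaas.conf (differs on each ZooKeeper node)

Pitfall: the principal in the Server section must be the zookeeper service principal for that node's hostname (zookeeper/hostname@jedy.com.cn); otherwise HiveServer2 fails authentication when it connects.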

cat /opt/zookeeper/conf/jaas.conf

Server {

com.sun.security.auth.module.Krb5LoginModule required

useKeyTab=true

keyTab="/etc/security/keytab/zookeeper.keytab"

storeKey=true

useTicketCache=false

principal="zookeeper/hadoop01.jedy.com.cn@jedy.com.cn";

};

Client {

com.sun.security.auth.module.Krb5LoginModule required

useKeyTab=true

keyTab="/etc/security/keytab/zookeeper.keytab"

storeKey=true

useTicketCache=false

principal="zookeeper/hadoop01.jedy.com.cn@jedy.com.cn";

};

Learner {

com.sun.security.auth.module.Krb5LoginModule required

useKeyTab=true

keyTab="/opt/hadoop/etc/hadoop/hdfs.keytab"

storeKey=true

useTicketCache=false

principal="hdfs/hadoop01.jedy.com.cn@jedy.com.cn";

};

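With multiple ZooKeeper nodes, change the hostname in the Server principal to that of the node the file is on.

The Server principal must also start with zookeeper, i.e. principal="zookeeper/hostname@jedy.com.cn"; otherwise clients report that the service cannot be found.

For example, on hadoop02 replace hadoop01 with hadoop02 throughout the file.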
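One way to avoid editing the file by hand on each node is to derive the per-node copy from the hadoop01 version. The sketch below is only an illustration: the function name render_jaas and the output path in the usage comment are assumptions, and it relies on hadoop01 appearing in the file only as part of principal names.

```shell
#!/bin/sh
# Sketch: derive a node-specific jaas.conf from the hadoop01 copy.
# Assumes "hadoop01." occurs in the template only inside principal names.
render_jaas() {
    template="$1"   # path to the hadoop01 jaas.conf
    node="$2"       # short host name, e.g. hadoop02
    # Replace every "hadoop01." with "<node>." and write the result to stdout.
    sed "s/hadoop01\./${node}./g" "$template"
}

# Example (the output path is illustrative):
# render_jaas /opt/zookeeper/conf/jaas.conf hadoop02 > /tmp/jaas.conf.hadoop02
```

Keep in mind that copying the hadoop01 jaas.conf to the other nodes unchanged would overwrite any per-node edits, so a rendered copy like this should be the one that ends up on each node.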

java.env

cat /opt/zookeeper/conf/java.env

export SERVER_JVMFLAGS="-Djava.security.auth.login.config=/opt/zookeeper/conf/jaas.conf"

export CLIENT_JVMFLAGS="${CLIENT_JVMFLAGS} -Djava.security.auth.login.config=/opt/zookeeper/conf/jaas.conf -Djava.security.krb5.conf=/etc/krb5.conf -Dzookeeper.server.principal=zookeeper/$HOSTNAME@jedy.com.cn"

Restart the service

Sync the configuration

[root@hadoop01 zookeeper]#for i in {02..03}; do

scp {zoo_hadoop.cfg,jaas.conf,java.env} hadoop$i:/opt/zookeeper/conf/; done

Restart ZooKeeper (run on every node)

for n in stop start status

do

zkServer.sh $n /opt/zookeeper/conf/zoo_hadoop.cfg

done

Troubleshooting

HDFS active/standby failover fails with:

kinit: KDC can’t fulfill requested option while renewing credentials

Cause and fix: a stale credential cache; clear it and re-authenticate

rm -rf /tmp/krb5cc_*

kinit -kt $HADOOP_HOME/etc/hadoop/hdfs.keytab hdfs/$HOSTNAME@jedy.com.cn

klist

Configuring Kerberos for Hadoop (HTTPS mode)

Create the HTTPS certificates

Step 1: Generate the root CA certificate

[root@hdp-node1 ~]# HADOOP_HOME=/opt/hadoop; mkdir $HADOOP_HOME/etc/hadoop/ssl ; cd $HADOOP_HOME/etc/hadoop/ssl

# On success, this command produces the files hdfs_ca_cert and hdfs_ca_key

[root@hdp-node1 ssl]# openssl req -new -x509 -keyout hdfs_ca_key -out hdfs_ca_cert -days 9999 -subj /C=CN/ST=zhejiang/L=hangzhou/O=jy/OU=jy/CN=hadoop01.jedy.com.cn

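# Password: M123456a

Copy the resulting files to the other machines.

scp -r $HADOOP_HOME/etc/hadoop/ssl root@xxx:$HADOOP_HOME/etc/hadoop/

Then run the following commands on every node, in order

cd $HADOOP_HOME/etc/hadoop/ssl

# Enter M123456a wherever a password is requested (chosen for convenience; change it if you have password requirements)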

Step 2: Generate the keystore

# Password and confirmation: M123456a

# On success, this command produces the keystore file

name="CN=$HOSTNAME, OU=jy, O=jy, L=hangzhou, ST=zhejiang, C=CN"

keytool -keystore keystore -alias localhost -validity 9999 -genkey -keyalg RSA -keysize 2048 -dname "$name" -keypass M123456a -storepass M123456a

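Step 3: Add the CA to the truststore

# Password and confirmation: M123456a; when asked whether to trust the certificate, enter yes

# On success, this command produces the truststore file

keytool -keystore truststore -alias CARoot -import -file hdfs_ca_cert -keypass M123456a -storepass M123456a

Enter yes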

Step 4: Export a certificate signing request (cert) from the keystore

# Password and confirmation: M123456a

# On success, this command produces the cert file

keytool -certreq -alias localhost -keystore keystore -file cert -keypass M123456a -storepass M123456a

Step 5: Sign cert with the CA

# On success, this command produces the cert_signed file

openssl x509 -req -CA hdfs_ca_cert -CAkey hdfs_ca_key -in cert -out cert_signed -days 9999 -CAcreateserial -passin pass:M123456a

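Step 6: Import the CA certificate and the CA-signed certificate into the keystore

# Password and confirmation: M123456a; when asked whether to trust the certificate, enter yes

# On success, these commands update the keystore file

keytool -keystore keystore -alias CARoot -import -file hdfs_ca_cert -keypass M123456a -storepass M123456a

Enter yes

keytool -keystore keystore -alias localhost -import -file cert_signed -keypass M123456a -storepass M123456a

# The directory finally contains: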

-rw-r--r-- 1 hdfs hadoop 1101 Jun 26 19:50 cert

-rw-r--r-- 1 hdfs hadoop 1224 Jun 26 19:50 cert_signed

-rw-r--r-- 1 hdfs root 1342 Jun 26 19:49 hdfs_ca_cert

-rw-r--r-- 1 hdfs hadoop 17 Jun 26 19:50 hdfs_ca_cert.srl

-rw-r--r-- 1 hdfs root 1834 Jun 26 19:49 hdfs_ca_key

-rw-r--r-- 1 hdfs hadoop 4159 Jun 26 19:52 keystore

-rw-r--r-- 1 hdfs hadoop 1012 Jun 26 19:50 truststore
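Since Steps 2 to 6 have to be repeated on every node, a small check can confirm that nothing was skipped. The following is a minimal sketch, not part of the original procedure: the function name missing_ssl_files is an assumption, and the file list is simply the set of names shown in the listing above (the .srl serial file is left out because only the signing run creates it).

```shell
#!/bin/sh
# Sketch: print the names of expected SSL artifacts that are missing from a directory.
missing_ssl_files() {
    dir="$1"
    for f in hdfs_ca_cert hdfs_ca_key keystore truststore cert cert_signed; do
        # Print the file name only when it does not exist as a regular file.
        [ -f "$dir/$f" ] || printf '%s\n' "$f"
    done
}

# Example: an empty result means all six files are present.
# missing_ssl_files /opt/hadoop/etc/hadoop/ssl
```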

Enable HTTPS for HDFS

hdfs-site.xml (additions)

<property>

<name>dfs.http.policy</name>

<value>HTTPS_ONLY</value>

<description>All web UIs are served over HTTPS only; the details are configured in ssl-server.xml and ssl-client.xml</description>

</property>

ssl-server.xml (new)

<configuration>

<!-- Password of the key inside the SSL keystore -->

<property>

<name>ssl.server.keystore.keypassword</name>

<value>M123456a</value>

<description>Must be specified.</description>

</property>

<!-- Path of the SSL keystore -->

<property>

<name>ssl.server.keystore.location</name>

<value>/opt/hadoop/etc/hadoop/ssl/keystore</value>

<description>Keystore to be used by NN and DN. Must be specified.</description>

</property>

<!-- Password of the SSL keystore -->

<property>

<name>ssl.server.keystore.password</name>

<value>M123456a</value>

<description>Must be specified.</description>

</property>

<!-- Path of the SSL truststore -->

<property>

<name>ssl.server.truststore.location</name>

<value>/opt/hadoop/etc/hadoop/ssl/truststore</value>

<description>Truststore to be used by NN and DN. Must be specified.</description>

</property>

<!-- Password of the SSL truststore -->

<property>

<name>ssl.server.truststore.password</name>

<value>M123456a</value>

<description>Optional. Default value is "". </description>

</property>

</configuration>

ssl-client.xml (new)

<configuration>

<!-- Password of the key inside the SSL keystore -->

<property>

<name>ssl.client.keystore.keypassword</name>

<value>M123456a</value>

<description>Optional. Default value is "". </description>

</property>

<!-- Path of the SSL keystore -->

<property>

<name>ssl.client.keystore.location</name>

<value>/opt/hadoop/etc/hadoop/ssl/keystore</value>

<description>Keystore to be used by clients like distcp. Must be specified. </description>

</property>

<!-- Password of the SSL keystore -->

<property>

<name>ssl.client.keystore.password</name>

<value>M123456a</value>

<description>Optional. Default value is "". </description>

</property>

<!-- Path of the SSL truststore -->

<property>

<name>ssl.client.truststore.location</name>

<value>/opt/hadoop/etc/hadoop/ssl/truststore</value>

<description>Truststore to be used by clients like distcp. Must be specified. </description>

</property>

<!-- Password of the SSL truststore -->

<property>

<name>ssl.client.truststore.password</name>

<value>M123456a</value>

<description>Optional. Default value is "". </description>

</property>

</configuration>

Configuring Kerberos for Hadoop

hadoop-env.sh (additions)

export HADOOP_OPTS="$HADOOP_OPTS -Djava.security.krb5.conf=/etc/krb5.conf -Djava.security.krb5.realm=jedy.com.cn -Djava.security.krb5.kdc=hadoop01.jedy.com.cn:88"

core-site.xml (additions)

<property>

<name>hadoop.security.authorization</name>

<value>true</value>

<description>Whether to enable Hadoop service-level authorization</description>

</property>

<property>

<name>hadoop.security.authentication</name>

<value>kerberos</value>

<description>Use Kerberos as Hadoop's authentication mechanism</description>

</property>

hdfs-site.xml (additions)

<!-- kerberos start -->

<!-- NameNode security config -->

<property>

<name>dfs.namenode.keytab.file</name>

<value>/opt/hadoop/etc/hadoop/hdfs.keytab</value>

<description>Principals created with -randkey have a random, unknown password, so login must use a keytab; this sets the path of the keytab the NameNode uses</description>

</property>

<property>

<name>dfs.namenode.kerberos.principal</name>

<value>hdfs/_HOST@jedy.com.cn</value>

</property>

<property>

<name>dfs.namenode.kerberos.https.principal</name>

<value>hdfs/_HOST@jedy.com.cn</value>

</property>

<property>

<name>dfs.namenode.kerberos.internal.spnego.principal</name>

<value>HTTP/_HOST@jedy.com.cn</value>

<description>Principal used for HTTPS endpoints (such as the NameNode web UI)</description>

</property>

<!-- JournalNode security config -->

<property>

<name>dfs.journalnode.keytab.file</name>

<value>/opt/hadoop/etc/hadoop/hdfs.keytab</value>

</property>

<property>

<name>dfs.journalnode.kerberos.principal</name>

<value>hdfs/_HOST@jedy.com.cn</value>

</property>

<property>

<name>dfs.journalnode.kerberos.internal.spnego.principal</name>

<value>HTTP/_HOST@jedy.com.cn</value>

</property>

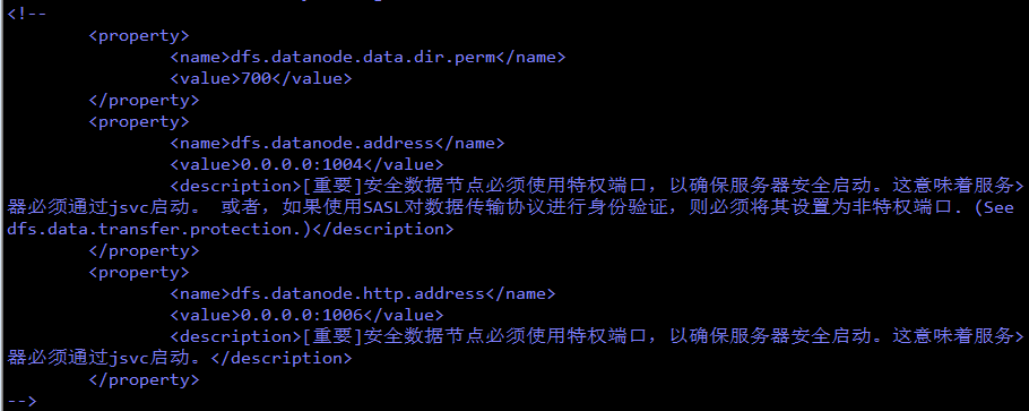

<!-- DataNode security config -->

<property>

<name>dfs.datanode.data.dir.perm</name>

<value>700</value>

</property>

<property>

<name>dfs.data.transfer.protection</name>

<value>integrity</value>

<description>Required when using HTTPS, otherwise the DataNode will not start; must not be set when using JSVC</description>

</property>

<property>

<name>dfs.datanode.kerberos.principal</name>

<value>hdfs/_HOST@jedy.com.cn</value>

<description>Principal used by the DataNode</description>

</property>

<property>

<name>dfs.datanode.keytab.file</name>

<value>/opt/hadoop/etc/hadoop/hdfs.keytab</value>

<description>Path of the keytab file used by the DataNode</description>

</property>

<property>

<name>dfs.block.access.token.enable</name>

<value>true</value>

<description>Enable HDFS block access tokens for secure operations</description>

</property>

<!-- WebHDFS security config -->

<property>

<name>dfs.web.authentication.kerberos.principal</name>

<value>HTTP/_HOST@jedy.com.cn</value>

<description>Principal used by WebHDFS</description>

</property>

<property>

<name>dfs.web.authentication.kerberos.keytab</name>

<value>/opt/hadoop/etc/hadoop/hdfs.keytab</value>

<description>The corresponding keytab file</description>

</property>

<!-- kerberos end-->

yarn-site.xml (additions)

<!-- kerberos start -->

<!-- resourcemanager -->

<property>

<name>yarn.web-proxy.principal</name>

<value>HTTP/_HOST@jedy.com.cn</value>

</property>

<property>

<name>yarn.web-proxy.keytab</name>

<value>/opt/hadoop/etc/hadoop/hdfs.keytab</value>

</property>

<property>

<name>yarn.resourcemanager.principal</name>

<value>hdfs/_HOST@jedy.com.cn</value>

</property>

<property>

<name>yarn.resourcemanager.keytab</name>

<value>/opt/hadoop/etc/hadoop/hdfs.keytab</value>

</property>

<!-- nodemanager -->

<property>

<name>yarn.nodemanager.principal</name>

<value>hdfs/_HOST@jedy.com.cn</value>

</property>

<property>

<name>yarn.nodemanager.keytab</name>

<value>/opt/hadoop/etc/hadoop/hdfs.keytab</value>

</property>

<property>

<name>yarn.nodemanager.container-executor.class</name>

<value>org.apache.hadoop.yarn.server.nodemanager.LinuxContainerExecutor</value>

</property>

<property>

<name>yarn.nodemanager.linux-container-executor.group</name>

<value>hdfs</value>

</property>

<property>

<name>yarn.nodemanager.linux-container-executor.path</name>

<value>/opt/hadoop/bin/container-executor</value>

</property>

<!-- timeline kerberos -->

<property>

<name>yarn.timeline-service.http-authentication.type</name>

<value>kerberos</value>

<description>Defines authentication used for the timeline server HTTP endpoint. Supported values are: simple | kerberos | #AUTHENTICATION_HANDLER_CLASSNAME#</description>

</property>

<property>

<name>yarn.timeline-service.principal</name>

<value>hdfs/_HOST@jedy.com.cn</value>

</property>

<property>

<name>yarn.timeline-service.keytab</name>

<value>/opt/hadoop/etc/hadoop/hdfs.keytab</value>

</property>

<property>

<name>yarn.timeline-service.http-authentication.kerberos.principal</name>

<value>HTTP/_HOST@jedy.com.cn</value>

</property>

<property>

<name>yarn.timeline-service.http-authentication.kerberos.keytab</name>

<value>/opt/hadoop/etc/hadoop/hdfs.keytab</value>

</property>

<property>

<name>yarn.nodemanager.container-localizer.java.opts</name>

<value>-Djava.security.krb5.conf=/etc/krb5.conf -Djava.security.krb5.realm=jedy.com.cn -Djava.security.krb5.kdc=hadoop01.jedy.com.cn:88</value>

</property>

<property>

<name>yarn.nodemanager.health-checker.script.opts</name>

<value>-Djava.security.krb5.conf=/etc/krb5.conf -Djava.security.krb5.realm=jedy.com.cn -Djava.security.krb5.kdc=hadoop01.jedy.com.cn:88</value>

</property>

<!-- kerberos end -->

mapred-site.xml (additions)

<!-- kerberos start -->

<!-- mapreduce -->

<property>

<name>mapreduce.map.java.opts</name>

<value>-Xmx1638M -Djava.security.krb5.conf=/etc/krb5.conf -Djava.security.krb5.realm=jedy.com.cn -Djava.security.krb5.kdc=hadoop01.jedy.com.cn:88</value>

</property>

<property>

<name>mapreduce.reduce.java.opts</name>

<value>-Xmx3276M -Djava.security.krb5.conf=/etc/krb5.conf -Djava.security.krb5.realm=jedy.com.cn -Djava.security.krb5.kdc=hadoop01.jedy.com.cn:88</value>

</property>

<property>

<name>mapreduce.jobhistory.keytab</name>

<value>/opt/hadoop/etc/hadoop/hdfs.keytab</value>

</property>

<property>

<name>mapreduce.jobhistory.principal</name>

<value>hdfs/_HOST@jedy.com.cn</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.spnego-keytab-file</name>

<value>/opt/hadoop/etc/hadoop/hdfs.keytab</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.spnego-principal</name>

<value>HTTP/_HOST@jedy.com.cn</value>

</property>

<property>

<name>mapred.child.java.opts</name>

<value>-Xmx1024m -Djava.security.krb5.conf=/etc/krb5.conf -Djava.security.krb5.realm=jedy.com.cn -Djava.security.krb5.kdc=hadoop01.jedy.com.cn:88</value>

</property>

<property>

<name>yarn.app.mapreduce.am.command-opts</name>

<value>-Xmx3276m -Djava.security.krb5.conf=/etc/krb5.conf -Djava.security.krb5.realm=jedy.com.cn -Djava.security.krb5.kdc=hadoop01.jedy.com.cn:88</value>

</property>

<!-- kerberos end -->

container-executor.cfg

#configured value of yarn.nodemanager.linux-container-executor.group

yarn.nodemanager.linux-container-executor.group=hdfs

#comma separated list of users who can not run applications

#banned.users: users who are not allowed to submit applications; do not leave this empty, or the hdfs user gets rejected

banned.users=bin

#Prevent other super-users

min.user.id=1000

##comma separated list of system users who CAN run applications

allowed.system.users=

feature.tc.enabled=false

Configure YARN to use LinuxContainerExecutor (run on every Hadoop node)

yum install openssl11-libs

ldd $HADOOP_HOME/bin/container-executor

cd /opt/hadoop/

chown root:hdfs .

chown root:hdfs etc

chown root:hdfs etc/hadoop

chown root:hdfs etc/hadoop/container-executor.cfg

chown root:hdfs bin/container-executor

chmod 6050 bin/container-executor

/opt/hadoop/bin/container-executor --checksetup

No output means the setup is correct

Restart the services

Distribute the configuration files

[root@hadoop01 hadoop]#pwd

/opt/hadoop/etc/hadoop

[root@hadoop01 hadoop]#for i in {02..03}; do

scp {hdfs-site.xml,ssl-server.xml,ssl-client.xml,hadoop-env.sh,core-site.xml,yarn-site.xml,mapred-site.xml,container-executor.cfg} hadoop$i:/opt/hadoop/etc/hadoop; done

Run steps 1 to 4 below on every Hadoop node

1. Switch to the service user and authenticate

[root@hadoopn ~]#su - hdfs

[hdfs@hadoopn ~]$kinit -kt $HADOOP_HOME/etc/hadoop/hdfs.keytab hdfs/$HOSTNAME@jedy.com.cn

klist

2. Add a cron job that re-authenticates from the keytab every hour

1 */1 * * * kinit -kt /opt/hadoop/etc/hadoop/hdfs.keytab hdfs/$HOSTNAME@jedy.com.cn

3. Restart the HDFS services in order

hdfs --daemon stop datanode

hdfs --daemon stop namenode

hdfs --daemon stop journalnode

hdfs --daemon stop zkfc

hdfs --daemon start zkfc

hdfs --daemon start journalnode

hdfs --daemon start namenode

hdfs --daemon start datanode

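Stop and start only the roles installed on the node; for example, skip the datanode commands on a node that does not run a DataNode.

4. Restart the YARN services in order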

yarn --daemon stop nodemanager

yarn --daemon stop resourcemanager

yarn --daemon start resourcemanager

yarn --daemon start nodemanager

Accessing the web UIs

namenode

https://hadoop01.jedy.com.cn:9871/

https://hadoop02.jedy.com.cn:9871/

resourcemanager

http://hadoop01.jedy.com.cn:8088/

http://hadoop02.jedy.com.cn:8088/

historyserver

http://hadoop01.jedy.com.cn:8088/

Troubleshooting

The NameNode fails to start with:

Failed on local exception: java.io.IOException: Couldn't set up IO streams: java.lang.IllegalArgumentException: Server has invalid Kerberos principal: hdfs/hadoop01.jedy.com.cn@jedy.com.cn, expecting: hdfs/172.31.52.81@jedy.com.cn

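Cause and fix:

Although hostnames are resolved through DNS, the host is identified by IP address instead of by name, probably because reverse resolution is missing.

The cluster hosts have to be added to the /etc/hosts file.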

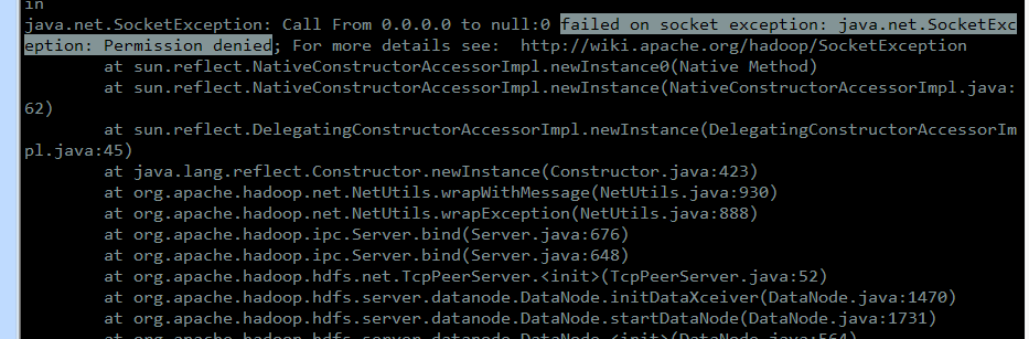

The DataNode fails to start with:

failed on socket exception: java.net.SocketException: Permission denied

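Cause and fix:

Following guides found online, the DataNode had been given ports below 1024. Ports below 1024 belong to the JSVC approach and cannot be used with the HTTPS approach, so change the DataNode to ports above 1024.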

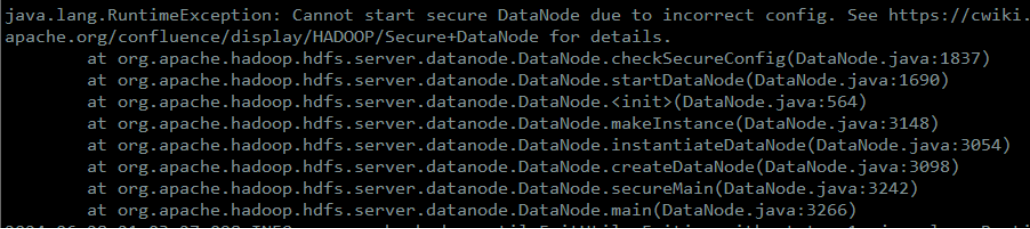

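The DataNode fails to start with "Cannot start secure DataNode due to incorrect config"

Cause and fix:

Another pitfall from online guides: with the HTTPS approach the DataNode requires the following property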

<property>

<name>dfs.data.transfer.protection</name>

<value>integrity</value>

<description>Required when using HTTPS, otherwise the DataNode will not start; must not be set when using JSVC</description>

</property>

YARN jobs submitted by the hdfs user fail with:

Failing this attempt.Diagnostics: [2024-07-18 23:53:24.887]Application application_1721317497304_0004 initialization failed (exitCode=255) with output: main : command provided 0

main : run as user is hdfs

main : requested yarn user is hdfs

Requested user hdfs is banned

For more detailed output, check the application tracking page: http://hadoop01.jedy.com.cn:8088/cluster/app/application_1721317497304_0004 Then click on links to logs of each attempt.

. Failing the application.

24/07/18 23:53:25 INFO mapreduce.Job: Counters: 0

Job job_1721317497304_0004 failed!

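Cause and fix:

The banned.users setting in container-executor.cfg is the problem.

banned.users lists the users who may not submit applications and must not be left empty. When it is empty, container-executor appears to fall back to a built-in ban list that includes hdfs, which is why the hdfs user is rejected. Set it explicitly to a list that does not contain hdfs: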

#configured value of yarn.nodemanager.linux-container-executor.group

yarn.nodemanager.linux-container-executor.group=hdfs

#comma separated list of users who can not run applications

#banned.users: users who are not allowed to submit applications; do not leave this empty, or the hdfs user gets rejected

banned.users=bin

#Prevent other super-users

min.user.id=1000

##comma separated list of system users who CAN run applications

allowed.system.users=

feature.tc.enabled=false
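To check quickly whether a given user is explicitly banned in a container-executor.cfg, something like the sketch below can help. It is an illustration only: the function name is_banned_user is an assumption, it inspects just the banned.users line in the file (not any defaults compiled into container-executor), and it expects a comma-separated list without spaces.

```shell
#!/bin/sh
# Sketch: succeed (exit status 0) if USER appears in banned.users of the given cfg file.
# Only the explicit list in the file is checked; whitespace around entries is not handled.
is_banned_user() {
    cfg="$1"
    user="$2"
    # Take the value of the last banned.users= line, if any.
    list=$(sed -n 's/^banned\.users=//p' "$cfg" | tail -n 1)
    # Wrap both sides in commas so that only whole names match.
    case ",$list," in
        *",$user,"*) return 0 ;;
        *) return 1 ;;
    esac
}

# Example:
# is_banned_user /opt/hadoop/etc/hadoop/container-executor.cfg hdfs && echo "hdfs is banned"
```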

YARN jobs submitted by a new user fail with:

main : run as user is crayonshin

main : requested yarn user is crayonshin

User crayonshin not found

Cause and fix (this is also the procedure for adding a user): the user must exist as an OS account on every node

for node in hadoop{01..03}; do

ssh $node "sudo useradd -M leichenlei.local -s /sbin/nologin"

done

Create the user's home directory on HDFS and set its owner

hdfs dfs -mkdir /user/leichenlei.local

hdfs dfs -chown leichenlei.local /user/leichenlei.local

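Create the user's principals on the Kerberos KDC; no keytab export is needed for these

for i in {01..03}; do kadmin.local -q "addprinc -randkey leichenlei.local/hadoop$i.jedy.com.cn@jedy.com.cn"; done

On the Kerberos KDC, create a keytab file for the client machine

Export the keytab to the client machine and authenticate with it there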
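The last two steps are described without commands, so here is a hedged dry-run sketch of what they might look like. Everything specific in it is an assumption made for illustration: the function name print_client_keytab_cmds, the /tmp keytab path, and the user/host principal format (modelled on the addprinc example above). It only prints the commands so they can be reviewed before being run.

```shell
#!/bin/sh
# Dry run: print the commands for creating a client keytab on the KDC,
# copying it to the client, and authenticating there.
print_client_keytab_cmds() {
    user="$1"     # OS / Kerberos user name
    client="$2"   # fully qualified client host name
    realm="$3"    # Kerberos realm
    princ="$user/$client@$realm"
    keytab="/tmp/$user.keytab"   # hypothetical location, pick a safer one in practice
    printf 'kadmin.local -q "addprinc -randkey %s"\n' "$princ"
    printf 'kadmin.local -q "xst -k %s %s"\n' "$keytab" "$princ"
    printf 'scp %s %s:%s\n' "$keytab" "$client" "$keytab"
    # The final command is meant to be run on the client, as the user itself.
    printf 'kinit -kt %s %s\n' "$keytab" "$princ"
}

# Example:
# print_client_keytab_cmds leichenlei.local hadoop01.jedy.com.cn jedy.com.cn
```

Note that xst generates new random keys for the principal by default, so any keytab exported earlier for the same principal stops working.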

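With Kerberos authentication enabled:

HADOOP_USER_NAME no longer has any effect; every job is submitted as the Kerberos-authenticated user (the one shown by klist).

Users no longer depend on core-site.xml.

When adding a user, the following cluster refresh commands are not needed: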

yarn rmadmin -refreshUserToGroupsMappings

yarn rmadmin -refreshSuperUserGroupsConfiguration

hdfs dfsadmin -refreshSuperUserGroupsConfiguration

hdfs dfsadmin -refreshUserToGroupsMappings

Configuring Kerberos for HBase

Enable Kerberos

hbase-site.xml (additions)

[root@hadoop01 ~]# cd /opt/hbase/conf

[root@hadoop01 conf]# vi hbase-site.xml

<!-- HBase Kerberos authentication settings: start -->

<property>

<name>hbase.security.authentication</name>

<value>kerberos</value>

</property>

<!-- Secure RPC for HBase -->

<property>

<name>hbase.rpc.engine</name>

<value>org.apache.hadoop.hbase.ipc.SecureRpcEngine</value>

</property>

<!-- Kerberos principal for HMaster -->

<property>

<name>hbase.master.kerberos.principal</name>

<value>hdfs/_HOST@jedy.com.cn</value>

</property>

<!-- Keytab file location for HMaster -->

<property>

<name>hbase.master.keytab.file</name>

<value>/opt/hadoop/etc/hadoop/hdfs.keytab</value>

</property>

<!-- Kerberos principal for RegionServer -->

<property>

<name>hbase.regionserver.kerberos.principal</name>

<value>hdfs/_HOST@jedy.com.cn</value>

</property>

<!-- Keytab file location for RegionServer -->

<property>

<name>hbase.regionserver.keytab.file</name>

<value>/opt/hadoop/etc/hadoop/hdfs.keytab</value>

</property>

<!--

<property>

<name>hbase.thrift.keytab.file</name>

<value>/opt/hadoop/etc/hadoop/hdfs.keytab</value>

</property>

<property>

<name>hbase.thrift.kerberos.principal</name>

<value>hdfs/_HOST@jedy.com.cn</value>

</property>

<property>

<name>hbase.rest.keytab.file</name>

<value>/opt/hadoop/etc/hadoop/hdfs.keytab</value>

</property>

<property>

<name>hbase.rest.kerberos.principal</name>

<value>hdfs/_HOST@jedy.com.cn</value>

</property>

<property>

<name>hbase.rest.authentication.type</name>

<value>kerberos</value>

</property>

<property>

<name>hbase.rest.authentication.kerberos.principal</name>

<value>hdfs/_HOST@jedy.com.cn</value>

</property>

<property>

<name>hbase.rest.authentication.kerberos.keytab</name>

<value>/opt/hadoop/etc/hadoop/hdfs.keytab</value>

</property>

-->

<!-- HBase Kerberos authentication settings: end -->

Restart the services

Sync the file

[root@hadoop01 conf]# for i in {02..03}; do

scp hbase-site.xml hadoop$i:/opt/hbase/conf; done

Switch to the service user and authenticate (run on hadoop01, hadoop02 and hadoop03)

[root@hadoopN conf]# su - hdfs

[hdfs@hadoopN ~]$ kinit -kt /opt/hadoop/etc/hadoop/hdfs.keytab hdfs/$HOSTNAME@jedy.com.cn

klist

Restart the services in order (run on hadoop01, hadoop02 and hadoop03)

hbase-daemon.sh stop regionserver

hbase-daemon.sh stop master

hbase-daemon.sh start master

hbase-daemon.sh start regionserver

Accessing the web UI

http://hadoop01.jedy.com.cn:16010/

Configuring Kerberos for Hive

Enable Kerberos

hive-site.xml (additions)

[root@hadoop01 ~]# cd /opt/hive/conf

[root@hadoop01 conf]# vi hive-site.xml

<!-- Hive Kerberos authentication settings: start -->

<!--hiveserver2-->

<property>

<name>hive.server2.authentication</name>

<value>KERBEROS</value>

</property>

<property>

<name>hive.server2.authentication.kerberos.principal</name>

<value>hdfs/_HOST@jedy.com.cn</value>

</property>

<property>

<name>hive.server2.authentication.kerberos.keytab</name>

<value>/opt/hadoop/etc/hadoop/hdfs.keytab</value>

</property>

<!-- metastore -->

<property>

<name>hive.metastore.sasl.enabled</name>

<value>true</value>

</property>

<property>

<name>hive.metastore.kerberos.keytab.file</name>

<value>/opt/hadoop/etc/hadoop/hdfs.keytab</value>

</property>

<property>

<name>hive.metastore.kerberos.principal</name>

<value>hdfs/_HOST@jedy.com.cn</value>

</property>

<!-- Hive Kerberos authentication settings: end -->

Start the Hive metastore

[root@hadoop01 conf]# su - hdfs

[hdfs@hadoop01 ~]$ cd /opt/hive

[hdfs@hadoop01 hive]$ nohup ./bin/hive --service metastore &

Start HiveServer2

[hdfs@hadoop01 hive]$ nohup ./bin/hive --service hiveserver2 &

Verify

hive

[hdfs@hadoop01 ~]$ hive

hive> show databases;

OK

default

Time taken: 0.914 seconds, Fetched: 1 row(s)

hive> show tables;

OK

Time taken: 0.33 seconds

hive>

beeline (direct connection)

[hdfs@hadoop01 ~]$ beeline

……

beeline> !connect jdbc:hive2://hadoop01:10000/default;principal=hdfs/hadoop01.jedy.com.cn@jedy.com.cn

……

0: jdbc:hive2://hadoop01:10000/default> show databases;

……

+----------------+

| database_name |

+----------------+

| default |

+----------------+

1 row selected (0.349 seconds)

0: jdbc:hive2://hadoop01:10000/default> show tables;

……

+-----------+

| tab_name |

+-----------+

+-----------+

No rows selected (0.084 seconds)

0: jdbc:hive2://hadoop01:10000/default> !quit

beeline (load-balanced through ZooKeeper service discovery)

[hdfs@hadoop01 ~]$ beeline

……

beeline> !connect jdbc:hive2://hadoop01.jedy.com.cn:12131,hadoop02.jedy.com.cn:12131,hadoop03.jedy.com.cn:12131/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=hiveserver2;principal=hdfs/hadoop02.jedy.com.cn@jedy.com.cn

……

0: jdbc:hive2://hadoop01.jedy.com.cn:12131> show databases;

……

+----------------+

| database_name |

+----------------+

| default |

+----------------+

1 row selected (0.349 seconds)

0: jdbc:hive2://hadoop01.jedy.com.cn:12131> show tables;

……

+-----------+

| tab_name |

+-----------+

+-----------+

No rows selected (0.084 seconds)

0: jdbc:hive2://hadoop01.jedy.com.cn:12131> !quit

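Troubleshooting

- After configuring ZooKeeper-based load balancing, HiveServer2 fails to start with:

KeeperErrorCode = AuthFailed for /hiveserver2

Cause:

Authentication between HiveServer2 and ZooKeeper fails. Kerberos authentication has to use the zookeeper user; this may be a bug.

Fix:

In the Server section of jaas.conf, the principal must start with zookeeper.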

Configuring Kerberos for Flink

Enable Kerberos

flink-conf.yaml (additions)

security.kerberos.login.use-ticket-cache: true

security.kerberos.login.keytab: /opt/hadoop/etc/hadoop/hdfs.keytab

security.kerberos.login.principal: hdfs/hadoop01.jedy.com.cn@jedy.com.cn

security.kerberos.login.contexts: Client

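Configuring Kerberos for Spark

Configuration

Spark needs no Kerberos configuration of its own; it is enough that the HDFS Kerberos configuration is correct.

Make sure the user accessing HDFS has already authenticated and has a local ticket cache, or pass a keytab file explicitly.

Verify

Switch to the service user hdfs and authenticate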

su - hdfs

kinit -kt $HADOOP_HOME/etc/hadoop/hdfs.keytab hdfs/$HOSTNAME@jedy.com.cn

klist

spark-shell

[hdfs@hadoop02 spark]$ ./bin/spark-shell

scala> var file = "/test/hdfs-hdfs-audit.log"

file: String = /test/hdfs-hdfs-audit.log

scala> spark.read.textFile(file).flatMap(_.split(" ")).collect

Submit a job to the Spark standalone cluster

[hdfs@hadoop02 spark]$ ./bin/spark-submit \

--principal hdfs/hadoop02.jedy.com.cn@jedy.com.cn \

--keytab /opt/hadoop/etc/hadoop/hdfs.keytab \

--class org.apache.spark.examples.SparkPi \

--master spark://hadoop02.jedy.com.cn:7077,hadoop03.jedy.com.cn:7077 \

$SPARK_HOME/examples/jars/spark-examples_2.12-3.5.1.jar \

10

Submit a job to YARN

[hdfs@hadoop02 spark]$ ./bin/spark-submit \

--principal hdfs/hadoop02.jedy.com.cn@jedy.com.cn \

--keytab /opt/hadoop/etc/hadoop/hdfs.keytab \

--class org.apache.spark.examples.SparkPi \

--master yarn \

$SPARK_HOME/examples/jars/spark-examples_2.12-3.5.1.jar \

10

Configuring Kerberos for Kafka

Modify the configuration files

config/kraft/server.properties (modify)

# kerberos

{%- set hostname = grains['localhost'] -%}

#{{hostname}} is the name of the respective host

Comment out

#inter.broker.listener.name=PLAINTEXT

#listeners=PLAINTEXT://{{hostname}}:9092,CONTROLLER://{{hostname}}:9093

#advertised.listeners=PLAINTEXT://{{hostname}}:9092

Add

listeners=SASL_PLAINTEXT://{{hostname}}:9092,CONTROLLER://{{hostname}}:9093

advertised.listeners=SASL_PLAINTEXT://{{hostname}}:9092

security.inter.broker.protocol=SASL_PLAINTEXT

sasl.mechanism.inter.broker.protocol=GSSAPI

sasl.enabled.mechanisms=GSSAPI

sasl.kerberos.service.name=kafka

The full config/kraft/server.properties is as follows

[root@hadoop01 kraft]# cat server.properties | grep -vE '^$|^#'

{%- set node_id = pillar['kafka_cluster']['broker_id'][grains['host']] -%}

process.roles=broker,controller

node.id={{node_id}}

controller.quorum.voters=4@hadoop01:9093,5@hadoop02:9093,6@hadoop03:9093

listeners=SASL_PLAINTEXT://{{hostname}}:9092,CONTROLLER://{{hostname}}:9093

advertised.listeners=SASL_PLAINTEXT://{{hostname}}:9092

controller.listener.names=CONTROLLER

listener.security.protocol.map=CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT,SSL:SSL,SASL_PLAINTEXT:SASL_PLAINTEXT,SASL_SSL:SASL_SSL

num.network.threads=3

num.io.threads=8

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

log.dirs=/data/store/kafka

num.partitions=1

num.recovery.threads.per.data.dir=1

offsets.topic.replication.factor=1

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

log.retention.hours=168

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

security.inter.broker.protocol=SASL_PLAINTEXT

sasl.mechanism.inter.broker.protocol=GSSAPI

sasl.enabled.mechanisms=GSSAPI

sasl.kerberos.service.name=kafka

config/kraft/kafka-jaas.conf

KafkaServer {

com.sun.security.auth.module.Krb5LoginModule required

serviceName="kafka"

useKeyTab=true

storeKey=true

useTicketCache=false

// Each host needs its own keytab file

keyTab="/opt/kafka/config/kafka.keytab"

// Full principal name of the Kafka server in the KDC

principal="kafka/{{hostname}}@jedy.com.cn";

};

KafkaClient {

com.sun.security.auth.module.Krb5LoginModule required

serviceName="kafka"

useKeyTab=true

storeKey=true

useTicketCache=false

// Each host needs its own keytab file

keyTab="/opt/kafka/config/kafka.keytab"

// Principal of the client in the KDC

principal="kafka/{{hostname}}@jedy.com.cn";

};

Client {

com.sun.security.auth.module.Krb5LoginModule required

serviceName="zookeeper"

useKeyTab=true

storeKey=true

useTicketCache=false

// Each host needs its own keytab file

keyTab="/opt/kafka/config/kafka.keytab"

// Principal of the client in the KDC

principal="kafka/{{hostname}}@jedy.com.cn";

};

config/kraft/client-kerberos.properties

[root@hadoop01 kraft]# cat client-kerberos.properties | grep -vE '^$|^#'

bootstrap.servers=localhost:9092

group.id=test-consumer-group

security.protocol=SASL_PLAINTEXT

sasl.mechanism=GSSAPI

sasl.kerberos.service.name=kafka

Modify the scripts under bin

bin/kafka-server-start.sh (add)

export KAFKA_OPTS="-Dzookeeper.sasl.client=false -Dzookeeper.sasl.client.username=kafka -Djava.security.krb5.conf=/etc/krb5.conf -Djava.security.auth.login.config=$KAFKA_HOME/config/kraft/kafka-jaas.conf"

exec $base_dir/kafka-run-class.sh $EXTRA_ARGS kafka.Kafka "$@"

bin/kafka-console-producer.sh (add; bin/kafka-console-consumer.sh needs the same export line before its own exec line)

# With Kerberos enabled, point the Kafka tool at its authentication configuration

export KAFKA_OPTS="-Djava.security.krb5.conf=/etc/krb5.conf -Djava.security.auth.login.config=$KAFKA_HOME/config/kraft/kafka-jaas.conf"

exec $(dirname $0)/kafka-run-class.sh kafka.tools.ConsoleProducer "$@"

bin/kafka-topics.sh (add)

# With Kerberos enabled, point the Kafka tool at its authentication configuration

export KAFKA_OPTS="-Djava.security.krb5.conf=/etc/krb5.conf -Djava.security.auth.login.config=$KAFKA_HOME/config/kraft/kafka-jaas.conf"

exec $(dirname $0)/kafka-run-class.sh kafka.admin.TopicCommand "$@"

Keytab file

Place kafka.keytab under config

Distribute the configuration files and bin scripts

[root@hadoop01 ~]# cd /opt/kafka

[root@hadoop01 kafka]# for i in {02..03}; do

scp {config/kraft/server.properties,config/kraft/kafka-jaas.conf,config/kraft/client-kerberos.properties} hadoop$i:/opt/kafka/config/kraft;

scp {bin/kafka-server-start.sh,bin/kafka-console-producer.sh,bin/kafka-topics.sh} hadoop$i:/opt/kafka/bin;

done

Restart the services (run on hadoop01 through hadoop03)

Switch to the service user and authenticate

su - kafka

kinit -kt $KAFKA_HOME/config/kafka.keytab kafka/$HOSTNAME@jedy.com.cn

klist

Restart the services in order

Stop

sudo -u kafka bash -c 'cd /opt/kafka && bin/kafka-server-stop.sh -daemon config/kraft/server.properties'

Start

sudo -u kafka bash -c 'cd /opt/kafka && nohup bin/kafka-server-start.sh -daemon config/kraft/server.properties >/dev/null 2>&1 &'

Verify

Create a topic

[kafka@hadoop03 ~]$ kafka-topics.sh --bootstrap-server hadoop03.jedy.com.cn:9092 --create --topic test1 --partitions 3 --replication-factor 3 --command-config=/opt/kafka/config/kraft/client-kerberos.properties

Created topic test1.

List the topics

[kafka@hadoop03 kafka]$ kafka-topics.sh --bootstrap-server hadoop03.jedy.com.cn:9092 --list --command-config=/opt/kafka/config/kraft/client-kerberos.properties

Start a producer

[kafka@hadoop03 ~]$ kafka-console-producer.sh --broker-list hadoop03.jedy.com.cn:9092 --topic test1 --producer.config=/opt/kafka/config/kraft/client-kerberos.properties

Start a consumer

[kafka@hadoop03 ~]$ kafka-console-consumer.sh --bootstrap-server hadoop03.jedy.com.cn:9092 --topic test1 --consumer.config=/opt/kafka/config/kraft/client-kerberos.properties

When the producer sends data, the consumer receives it.

Troubleshooting

Error

kafka Error creating broker listeners from 'SASL_PLAINTEXT:

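Cause and fix:

The culprit is the Kafka configuration file: a comment must be on a line of its own, otherwise it is treated as part of the setting's value and causes this error.

After moving the comments onto separate lines, the Kafka cluster started successfully.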
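A quick way to spot this problem before restarting is to search the properties file for settings followed by a trailing comment. The sketch below is an assumption-laden illustration rather than an official Kafka tool: the function name find_inline_comments is made up, and the pattern only flags a '#' that follows whitespace after a setting, so a value that legitimately contains " #" would be reported as well.

```shell
#!/bin/sh
# Sketch: list "line-number:line" for settings that carry a trailing '# comment'.
# Full-line comments (first non-blank character is '#') are ignored.
find_inline_comments() {
    grep -nE '^[[:space:]]*[^#[:space:]][^#]*[[:space:]]#' "$1"
}

# Example:
# find_inline_comments /opt/kafka/config/kraft/server.properties
```

grep exits with status 1 when nothing matches, so an empty result with a non-zero status means the file is clean.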

Closing

Contact: lita2lz
