Big Data Learning 8: Flume, a Distributed Log Collection Framework (Hands-On: Collecting Logs from Server A to Server B in Real Time)


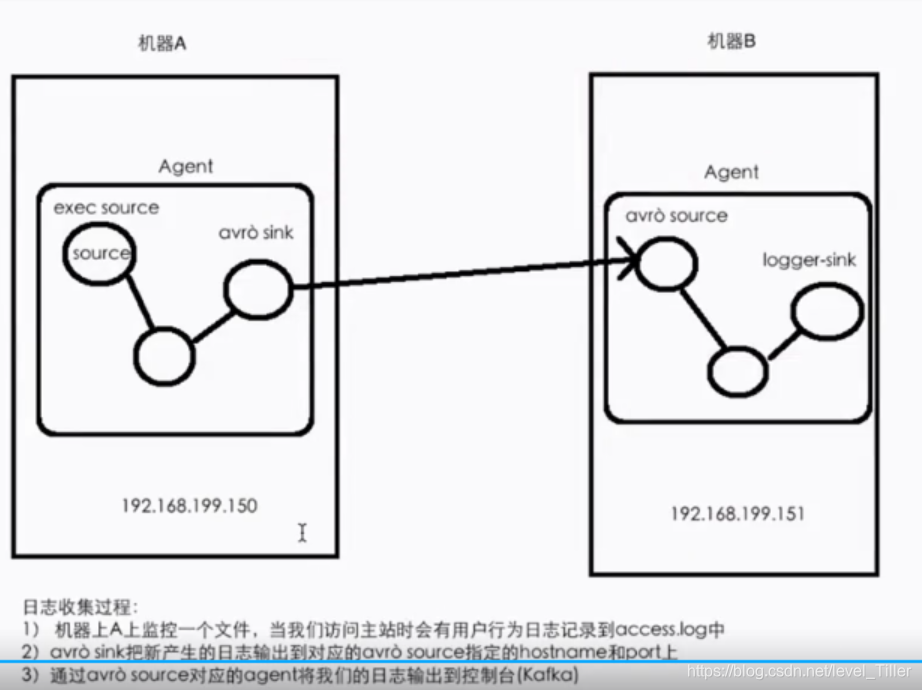

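Transfer between nodes generally uses an avro sink: the avro sink of the agent on one machine sends events to the avro source of the agent on the other machine.

Technology selection:

Server A:

exec source + memory channel + avro sink

Server B:

avro source + memory channel + logger sink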

(1) Create the Flume configuration file exec-memory-avro.conf on server A

exec-memory-avro.sources = exec-source

exec-memory-avro.sinks = avro-sink

exec-memory-avro.channels = memory-channel

exec-memory-avro.sources.exec-source.type = exec

exec-memory-avro.sources.exec-source.command = tail -F /home/hadoop/data/data.log

exec-memory-avro.sources.exec-source.shell = /bin/sh -c

exec-memory-avro.sinks.avro-sink.type = avro

exec-memory-avro.sinks.avro-sink.hostname = hadoop000

exec-memory-avro.sinks.avro-sink.port = 44444

exec-memory-avro.channels.memory-channel.type = memory

exec-memory-avro.sources.exec-source.channels = memory-channel

exec-memory-avro.sinks.avro-sink.channel = memory-channel

(2) Create the Flume configuration file avro-memory-logger.conf on server B

avro-memory-logger.sources = avro-source

avro-memory-logger.sinks = logger-sink

avro-memory-logger.channels = memory-channel

avro-memory-logger.sources.avro-source.type = avro

avro-memory-logger.sources.avro-source.bind = hadoop000

avro-memory-logger.sources.avro-source.port = 44444

avro-memory-logger.sinks.logger-sink.type = logger

avro-memory-logger.channels.memory-channel.type = memory

avro-memory-logger.sources.avro-source.channels = memory-channel

avro-memory-logger.sinks.logger-sink.channel = memory-channel

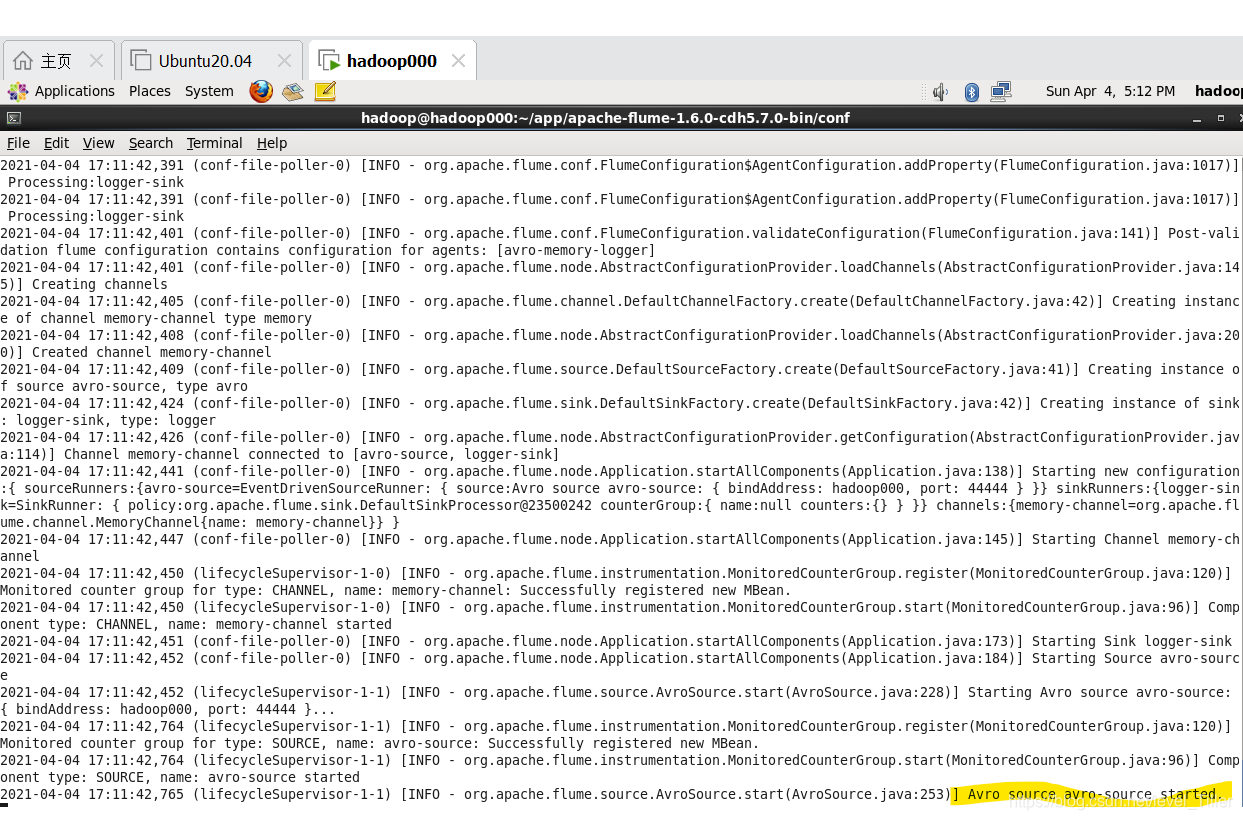

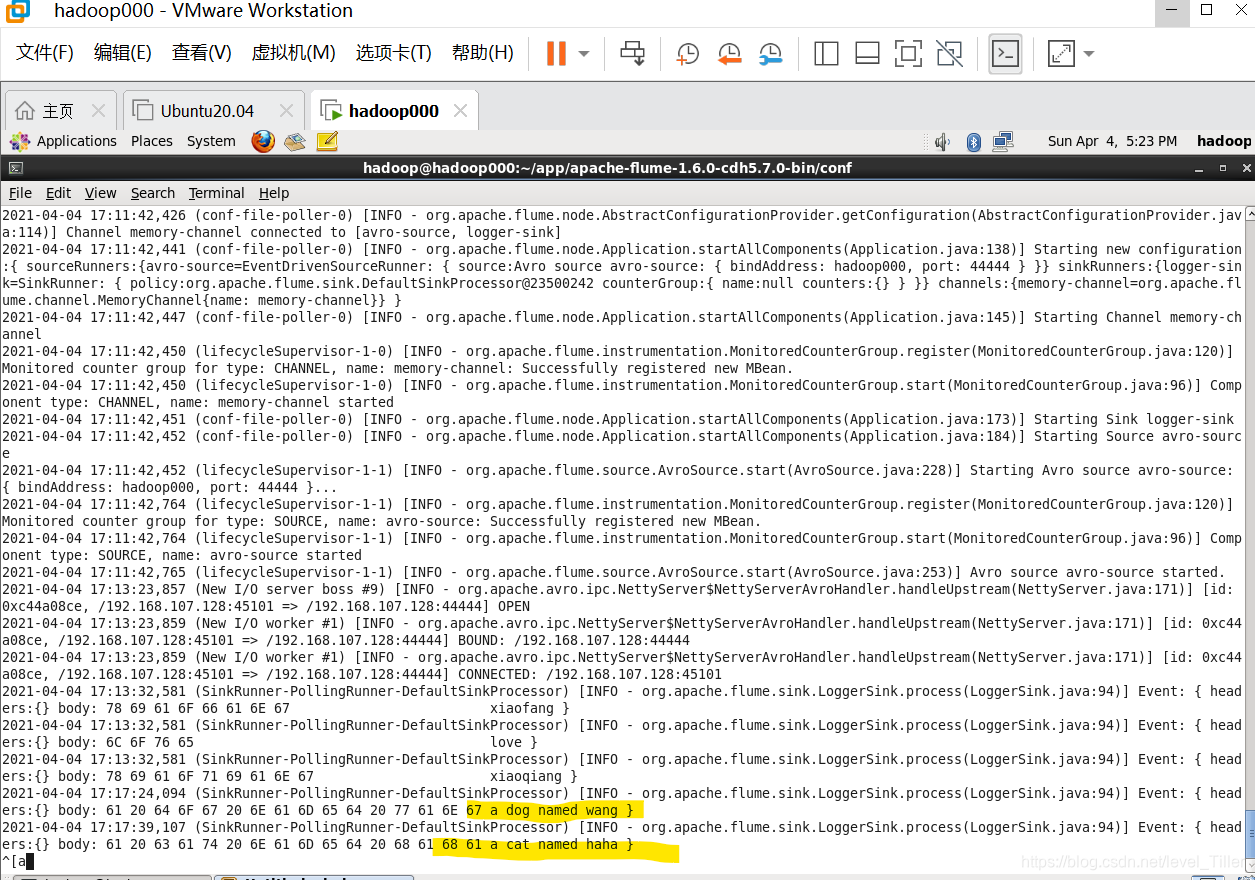

(3) First start the Flume agent avro-memory-logger on server B

flume-ng agent \
--name avro-memory-logger \
--conf $FLUME_HOME/conf \
--conf-file $FLUME_HOME/conf/avro-memory-logger.conf \
-Dflume.root.logger=INFO,console

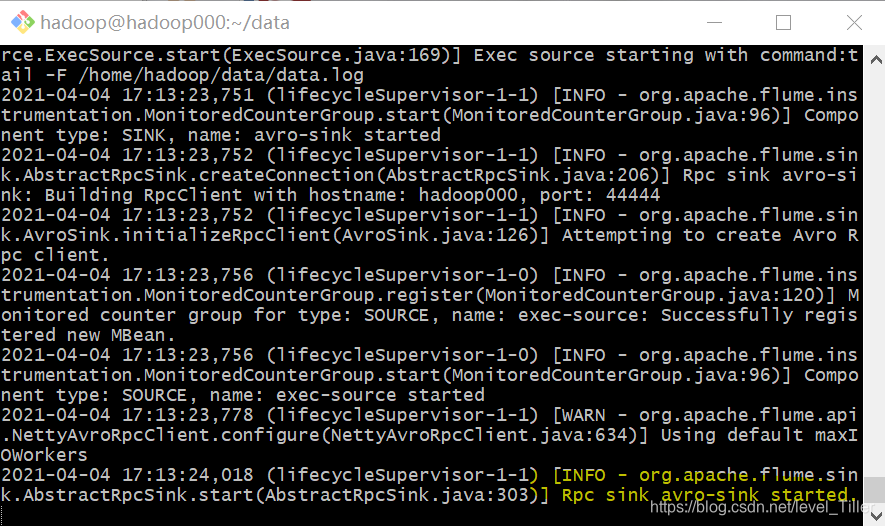

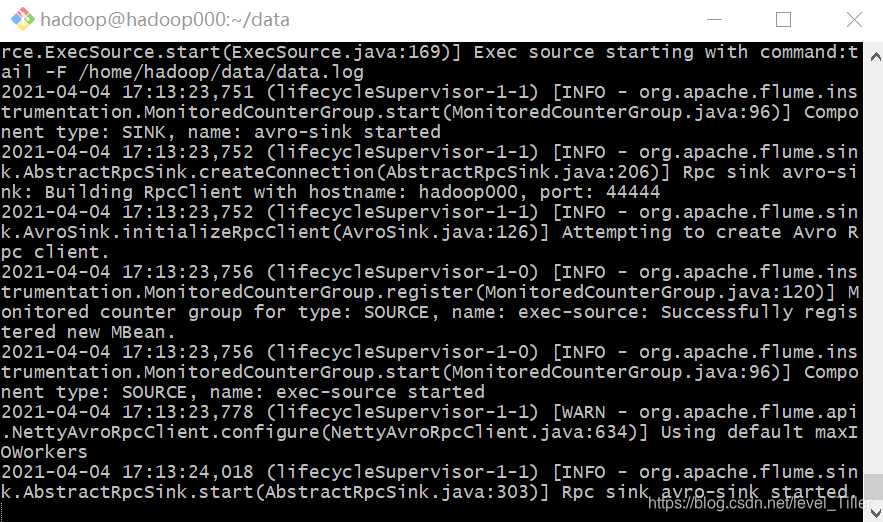
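Because the avro sink on server A connects to the avro source on server B, it can help to confirm that B is actually listening before moving on. The following is a minimal sketch rather than part of the original setup: `wait_for_port` is a hypothetical helper name, and the connection test relies on bash's `/dev/tcp` feature, so it assumes the script runs under bash.

```shell
#!/usr/bin/env bash
# Return 0 once HOST:PORT accepts a TCP connection, 1 if it never does.
# Usage: wait_for_port HOST PORT [ATTEMPTS]   (default: 10 attempts, 1 second apart)
# Hypothetical helper for illustration; not part of Flume.
wait_for_port() {
  host="$1"; port="$2"; attempts="${3:-10}"
  i=1
  while [ "$i" -le "$attempts" ]; do
    # /dev/tcp/HOST/PORT is a bash feature: opening it attempts a TCP connection.
    # The subshell closes the descriptor again as soon as it exits.
    if (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; then
      return 0
    fi
    # Pause between attempts, but not after the last one.
    [ "$i" -lt "$attempts" ] && sleep 1
    i=$((i + 1))
  done
  return 1
}
```

With the host and port used in the configuration files above, running `wait_for_port hadoop000 44444` on server A should succeed once the agent on server B has bound its avro source.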

(4) Then start the Flume agent exec-memory-avro on server A

flume-ng agent \
--name exec-memory-avro \
--conf $FLUME_HOME/conf \
--conf-file $FLUME_HOME/conf/exec-memory-avro.conf \
-Dflume.root.logger=INFO,console

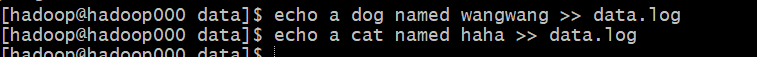

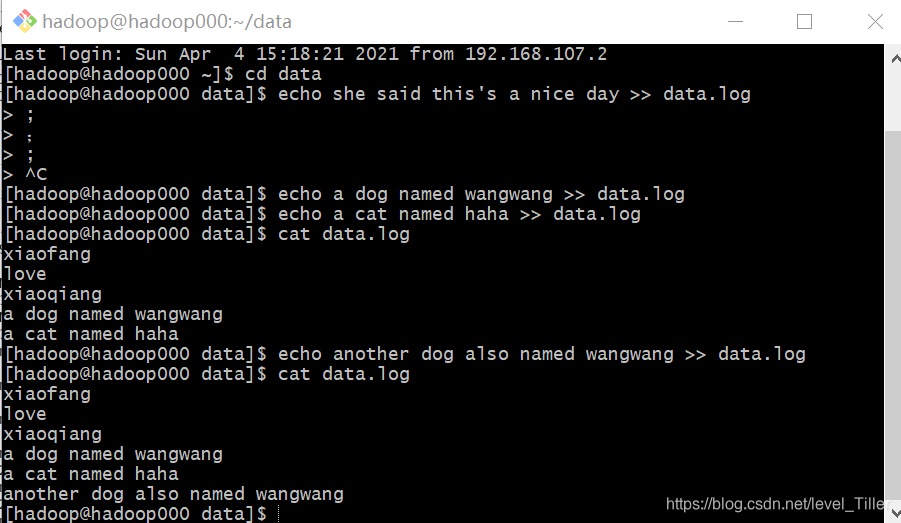

(5) Test

On server A, append log lines to the monitored file.

Server A does not print the log data to its own console.

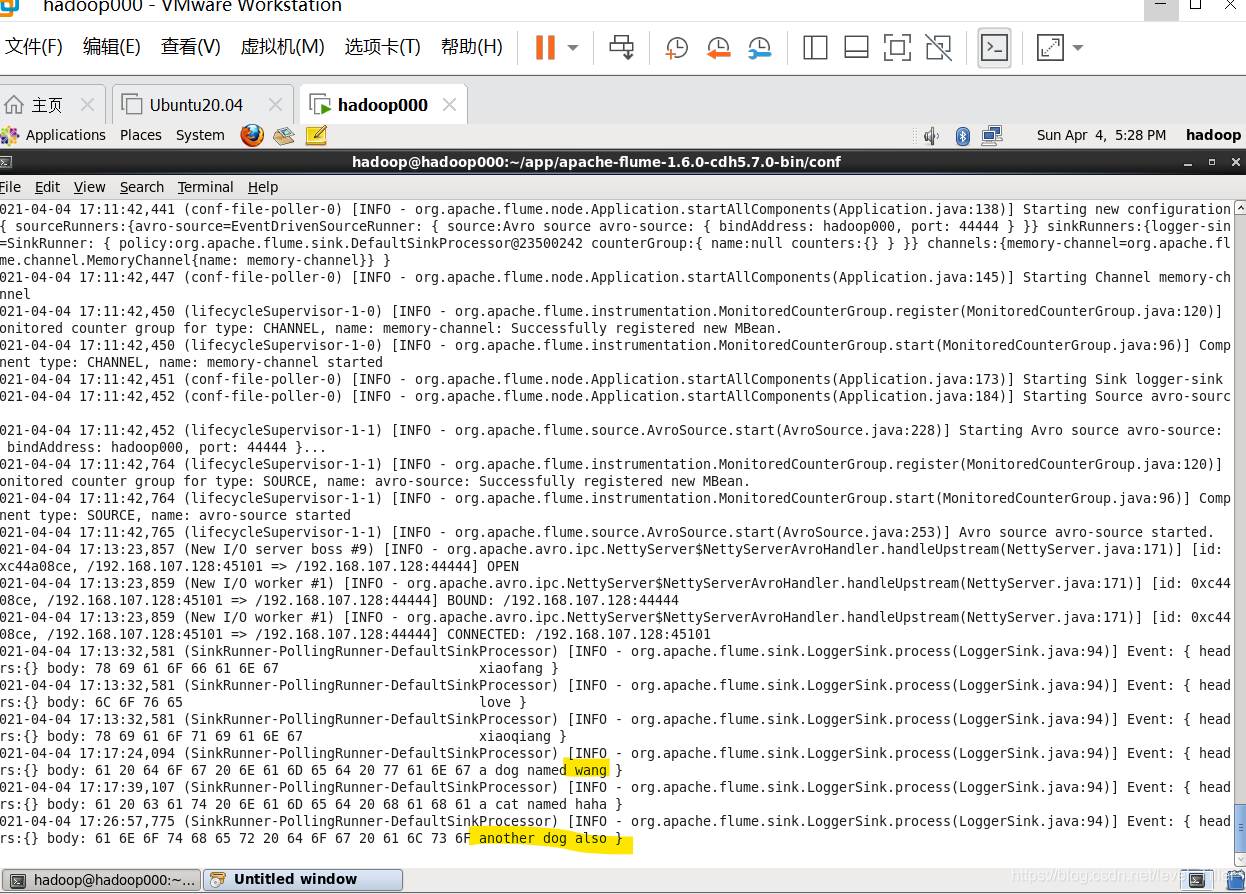
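One way to generate test input on server A is sketched below. `append_test_lines` is a hypothetical helper and the message text is made up for illustration; each line is deliberately longer than 16 bytes so that any truncation on the receiving side is easy to spot.

```shell
# Append COUNT numbered test lines to FILE, one event per line.
# Usage: append_test_lines FILE [COUNT]   (COUNT defaults to 3)
# Hypothetical helper for illustration; not part of Flume.
append_test_lines() {
  file="$1"; count="${2:-3}"
  n=1
  while [ "$n" -le "$count" ]; do
    # ">>" appends, so "tail -F" in the exec source picks up each new line.
    printf 'flume test event number %s\n' "$n" >> "$file"
    n=$((n + 1))
  done
}
```

For the file monitored by the exec source in this setup, the call would be `append_test_lines /home/hadoop/data/data.log 3`.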

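Server B prints the log data to its console (Logger sink).

Problem:

Part of the log content is missing from the output on server B.

Solution:

The Logger Sink has a property maxBytesToLog, the maximum number of bytes of the event body to log, which defaults to 16. Event bodies longer than that are cut off in the console output, so set maxBytesToLog to a larger value in the logger sink's configuration on server B.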
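A minimal sketch of applying that setting from the shell follows. `set_flume_property` is a hypothetical helper, and the value 1024 used in the usage note is only an assumed example; pick whatever limit suits your log lines.

```shell
# Append "KEY = VALUE" to a Flume properties file unless KEY is already set there.
# Usage: set_flume_property CONF_FILE KEY VALUE
# Hypothetical helper for illustration; not part of Flume.
set_flume_property() {
  conf_file="$1"; key="$2"; value="$3"
  # awk exits 0 only if some line has exactly this key before the "=".
  if awk -F= -v k="$key" '
       { name = $1; gsub(/[ \t]/, "", name); if (name == k) found = 1 }
       END { exit !found }' "$conf_file" 2>/dev/null; then
    echo "already set: $key" >&2
    return 1
  fi
  printf '%s = %s\n' "$key" "$value" >> "$conf_file"
}
```

For the agent on server B this would be called as `set_flume_property "$FLUME_HOME/conf/avro-memory-logger.conf" avro-memory-logger.sinks.logger-sink.maxBytesToLog 1024`, which adds the line `avro-memory-logger.sinks.logger-sink.maxBytesToLog = 1024` to the file. Restarting the agent on server B afterwards makes sure the new value is in effect.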
