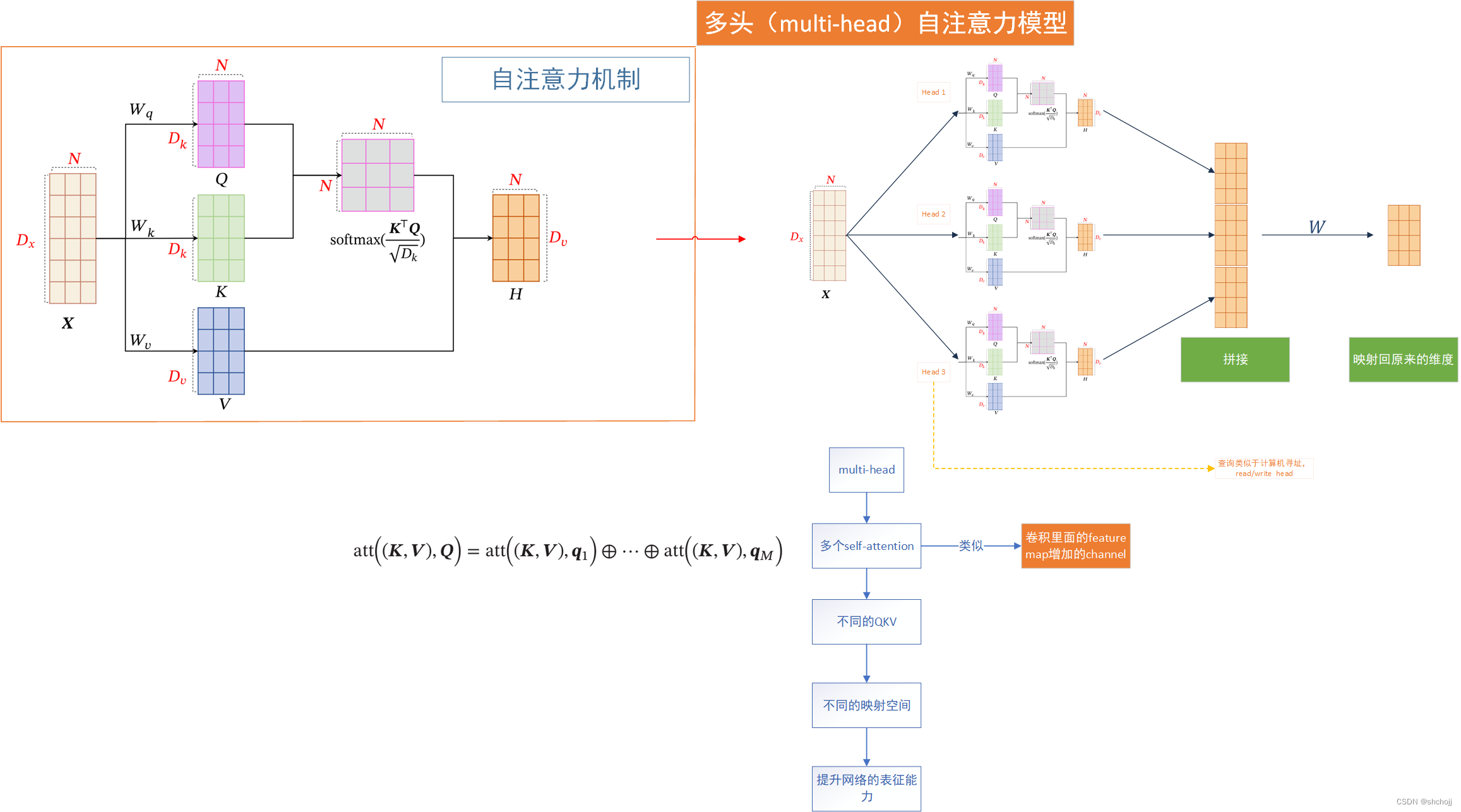

Transformer (III): Multi-Head Self-Attention
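The sketch below is a minimal pure-Python take on multi-head self-attention. It follows the formulation in Vaswani et al., "Attention Is All You Need". Each head computes softmax(QK^T / sqrt(d_k))V on its own slice of the projected queries, keys and values. The head outputs are then concatenated and projected by W^O. All helper names (`matmul`, `split_heads`, `multi_head_self_attention`, and so on) and the random weights are illustrative assumptions, not the author's code. A real model would use a tensor library, learned weights, masking and batching.

```python
import math
import random

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b (rows of a dotted with columns of b)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax_rows(a: Matrix) -> Matrix:
    """Row-wise softmax, shifted by each row's max for numerical stability."""
    out = []
    for row in a:
        m = max(row)
        exps = [math.exp(v - m) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """softmax(Q K^T / sqrt(d_k)) V for a single head."""
    d_k = len(q[0])
    scores = matmul(q, transpose(k))
    scores = [[s / math.sqrt(d_k) for s in row] for row in scores]
    return matmul(softmax_rows(scores), v)


def split_heads(x: Matrix, num_heads: int) -> list[Matrix]:
    """Cut the feature dimension into num_heads contiguous slices of width d_k."""
    d_k = len(x[0]) // num_heads
    return [[row[h * d_k:(h + 1) * d_k] for row in x] for h in range(num_heads)]


def multi_head_self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix,
                              w_o: Matrix, num_heads: int) -> Matrix:
    """Self-attention: queries, keys and values are all projections of the same x."""
    assert len(x[0]) % num_heads == 0, "d_model must be divisible by num_heads"
    q_heads = split_heads(matmul(x, w_q), num_heads)
    k_heads = split_heads(matmul(x, w_k), num_heads)
    v_heads = split_heads(matmul(x, w_v), num_heads)
    heads = [scaled_dot_product_attention(q, k, v)
             for q, k, v in zip(q_heads, k_heads, v_heads)]
    # Concatenate the per-head outputs position by position, then project with W^O.
    concat = [sum((head[i] for head in heads), []) for i in range(len(x))]
    return matmul(concat, w_o)


# Usage with hypothetical random weights: 4 tokens, d_model = 8, 2 heads.
random.seed(0)


def rand_matrix(rows: int, cols: int) -> Matrix:
    return [[random.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


seq_len, d_model, num_heads = 4, 8, 2
x = rand_matrix(seq_len, d_model)
w_q, w_k, w_v, w_o = (rand_matrix(d_model, d_model) for _ in range(4))
out = multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads)
print(len(out), len(out[0]))  # prints: 4 8
```

The sketch slices one d_model × d_model projection into column blocks rather than keeping separate per-head matrices. This is equivalent to giving each head its own W_i^Q, W_i^K, W_i^V of size d_model × d_k. The output keeps the input's shape (sequence length × d_model), which is what lets attention layers be stacked.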

https://www.bilibili.com/video/BV1sU4y1G7CN?spm_id_from=333.880.my_history.page.click

https://nndl.github.io/

https://nndl.github.io/ppt/chap-%E6%B3%A8%E6%84%8F%E5%8A%9B%E6%9C%BA%E5%88%B6%E4%B8%8E%E5%A4%96%E9%83%A8%E8%AE%B0%E5%BF%86.pptx

https://zhuanlan.zhihu.com/p/410776234

https://zhuanlan.zhihu.com/p/383675526

https://arxiv.org/pdf/2106.04554.pdf

https://blog.eson.org/pub/664e9bad/

http://speech.ee.ntu.edu.tw/~tlkagk/courses/ML_2019/Lecture/Transformer%20(v5).pdf

https://github.com/luweiagi/machine-learning-notes/blob/master/docs/natural-language-processing/self-attention-and-transformer/attention-is-all-you-need/attention-is-all-you-need.md

https://zhuanlan.zhihu.com/p/264749298
