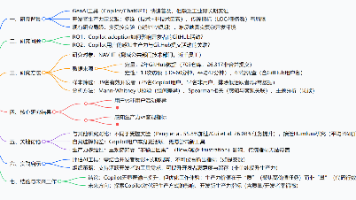

Deep Learning for Text Classification: Text Vectorization


1. Word-level one-hot encoding with Keras
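To make clear what the Keras code below computes, here is a minimal pure-Python sketch of word-level one-hot encoding. It only approximates the behaviour of `Tokenizer`: the `tokenize` helper and its regular expression are assumptions made for illustration, not part of Keras. Words are indexed by frequency starting at 1 (index 0 is left unused), and each sample becomes a row with a 1 in the column of every word it contains.

```python
import re
from collections import Counter

samples = ['you got a dream,you got to protect it',
           'everything that has a beginning,has an end']

def tokenize(text):
    # Hypothetical helper: lowercase, then split on anything that is
    # not a letter, digit or apostrophe.
    return re.findall(r"[a-z0-9']+", text.lower())

# Count every word across all samples.
counts = Counter(word for text in samples for word in tokenize(text))

# More frequent words get smaller indices; index 0 is reserved.
word_index = {word: i
              for i, (word, _) in enumerate(counts.most_common(), start=1)}

num_words = 100
one_hot = [[0.0] * num_words for _ in samples]
for row, text in enumerate(samples):
    for word in tokenize(text):
        idx = word_index[word]
        if idx < num_words:          # ignore words beyond the vocabulary cap
            one_hot[row][idx] = 1.0

print(len(word_index))
print(sum(one_hot[0]), sum(one_hot[1]))
```

Each row has length `num_words`, so most columns stay at 0; this sparsity is what word embeddings are meant to avoid.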

from keras.preprocessing.text import Tokenizer

samples = ['you got a dream,you got to protect it','everything that has a beginning,has an end']

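tokenizer = Tokenizer(num_words=100)  # create a tokenizer that only considers the most common words (capped by num_words=100)

tokenizer.fit_on_texts(samples)  # build the word index

sequence = tokenizer.texts_to_sequences(samples)  # turn each string into a list of integer indices

one_hot_results = tokenizer.texts_to_matrix(samples, mode='binary')  # binary one-hot matrix of shape (len(samples), num_words)

word_index = tokenizer.word_index  # retrieve the word index that was computed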

print('found %s unique tokens.'%len(word_index))

# found 13 unique tokens.

2. Word embeddings

Learning word embeddings with an Embedding layer

from keras.layers import Embedding

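embedding_layers = Embedding(input_dim, output_dim)  # arguments: maximum word index + 1, embedding dimensionality

The Embedding layer takes a 2D integer tensor of shape (samples, sequence_length) as input and returns a 3D floating-point tensor of shape (samples, sequence_length, output_dim).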
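Conceptually, an Embedding layer is a lookup table: each integer index selects one row of a weight matrix. The NumPy sketch below mimics only that lookup, not Keras itself. The sizes, the random weights and the example batch are assumptions chosen for illustration.

```python
import numpy as np

input_dim, output_dim = 1000, 8          # hypothetical vocabulary and embedding sizes
rng = np.random.default_rng(0)

# Stand-in for the layer's weights: one row per word index.
weights = rng.normal(size=(input_dim, output_dim)).astype('float32')

# 2D integer input: (samples, sequence_length)
batch = np.array([[4, 10, 2],
                  [7, 0, 0]])

# Indexing with a 2D integer array yields a 3D float array:
# (samples, sequence_length, output_dim)
embedded = weights[batch]

print(batch.shape, embedded.shape)
```

In a real layer these weights start out random and are adjusted by backpropagation, unless they are loaded from pretrained vectors and frozen.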

Using pretrained word embeddings (GloVe as an example)

## Parse the GloVe word-embeddings file

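import os

import numpy as np

glove_dir = 'Download/glove.6B'

embedding_index = {}  # maps each word to its embedding vector

f = open(os.path.join(glove_dir, 'glove.6B.100d.txt'), encoding='utf-8')

for line in f:

    values = line.split()

    word = values[0]  # first field is the word itself

    coefs = np.asarray(values[1:], dtype='float32')  # remaining fields are the vector

    embedding_index[word] = coefs

f.close()

print('found %s word vectors.' % len(embedding_index))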
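The parsing loop relies on the GloVe text format: one word per line, followed by its space-separated vector components. The sketch below runs the same logic on an in-memory stand-in, so it can be tried without downloading anything. The two words and their 3-dimensional vectors are made up for illustration and are not real GloVe values.

```python
import io

import numpy as np

# Toy stand-in for a GloVe file (made-up 3-dimensional vectors).
fake_glove = io.StringIO(
    "the 0.1 0.2 0.3\n"
    "dream -0.5 0.0 0.25\n"
)

embedding_index = {}
for line in fake_glove:
    values = line.split()
    word = values[0]                                   # the token
    coefs = np.asarray(values[1:], dtype='float32')    # its vector
    embedding_index[word] = coefs

print(len(embedding_index), embedding_index['dream'].shape)
```

With the real `glove.6B.100d.txt` file each vector would have 100 components instead of 3.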

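## Build the GloVe embedding matrix

embedding_dim = 100

embedding_matrix = np.zeros((max_words, embedding_dim))  # max_words: vocabulary size, assumed to be defined earlier

for word, i in word_index.items():

    if i < max_words:

        embedding_vector = embedding_index.get(word)

        if embedding_vector is not None:

            embedding_matrix[i] = embedding_vector  # words missing from GloVe stay all-zero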
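A small worked example may help show which rows of the matrix get filled. The vocabulary, vectors and sizes below are invented for illustration. Row 0 stays zero because the word index starts at 1, words without a pretrained vector stay zero, and words whose index is not below `max_words` are skipped.

```python
import numpy as np

max_words, embedding_dim = 5, 3          # hypothetical sizes

# Toy word index (1-based) and toy pretrained vectors.
word_index = {'you': 1, 'got': 2, 'dream': 3, 'zzz': 4, 'rare': 5}
embedding_index = {
    'you': np.array([0.1, 0.2, 0.3], dtype='float32'),
    'dream': np.array([-0.5, 0.0, 0.25], dtype='float32'),
    'rare': np.array([9.0, 9.0, 9.0], dtype='float32'),
}

embedding_matrix = np.zeros((max_words, embedding_dim))
for word, i in word_index.items():
    if i < max_words:                     # 'rare' (index 5) is out of range
        embedding_vector = embedding_index.get(word)
        if embedding_vector is not None:  # 'got' and 'zzz' have no vector
            embedding_matrix[i] = embedding_vector

print(embedding_matrix)
```

Only rows 1 and 3 end up non-zero here; the same pattern applies at full scale with `max_words` rows and 100 columns.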

## Define the model according to your own needs

from keras.models import Sequential

from keras.layers import Embedding, Flatten, Dense

model = Sequential()

model.add(Embedding(max_words, embedding_dim, input_length=maxlen))

model.add(Flatten())

model.add(Dense(16, activation='relu'))

model.add(Dense(1, activation='sigmoid'))

model.summary()

# Load the GloVe embeddings into the model

model.layers[0].set_weights([embedding_matrix])

model.layers[0].trainable = False  # freeze the Embedding layer so the pretrained vectors are not updated during training
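To see what freezing changes, it can help to count the parameters of the model by hand. The post does not state `max_words` or `maxlen`, so the values below are assumptions picked purely for the arithmetic; only `embedding_dim = 100` comes from the code above.

```python
# Hypothetical sizes; the original post does not give max_words or maxlen.
max_words = 10000      # vocabulary size (assumed)
maxlen = 100           # tokens per input sequence (assumed)
embedding_dim = 100    # matches glove.6B.100d

embedding_params = max_words * embedding_dim   # one vector per word index
flatten_units = maxlen * embedding_dim         # Flatten has no weights of its own
dense1_params = flatten_units * 16 + 16        # weights + biases of Dense(16)
dense2_params = 16 * 1 + 1                     # weights + bias of Dense(1)

total = embedding_params + dense1_params + dense2_params
non_trainable = embedding_params               # the frozen Embedding layer
trainable = total - non_trainable

print(total, trainable, non_trainable)
```

Under these assumed sizes the Embedding layer holds most of the parameters, so freezing it leaves only the two Dense layers to be trained.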
