Pure front-end uploads to MinIO, including chunked upload of large files

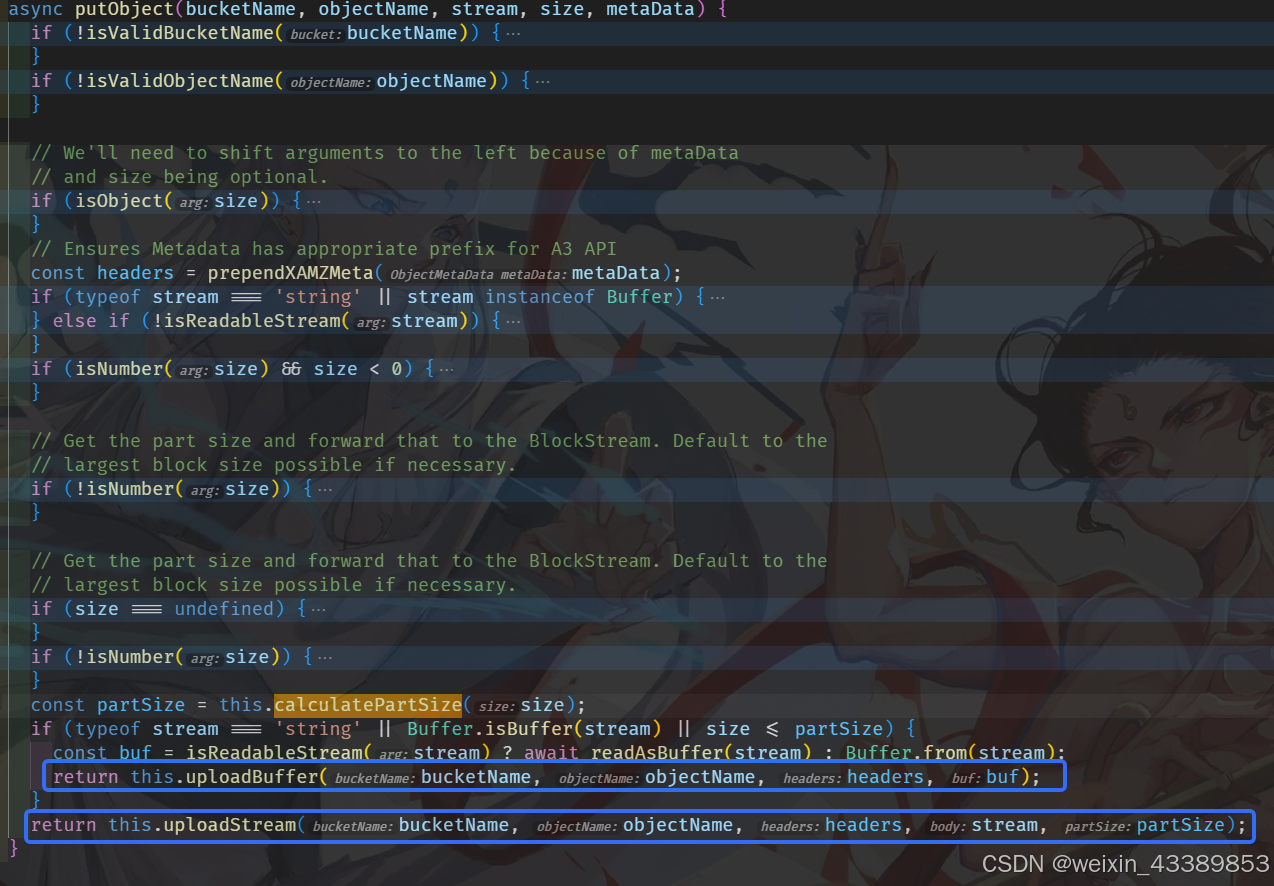


1. Preface

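I looked at plenty of complicated examples online, but because our company is short on both server bandwidth and budget, the only option left was to have the front end upload directly to MinIO, large files included. Note that this approach only works for files under 5 GB (though hardly anyone should need to upload files that large anyway).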

2. Implementation

```javascript
import { Minio } from "minio-js";
```

Package versions you need to install:

```json
"buffer": "^6.0.3",
"minio-js": "^1.0.7",
"readable-stream": "^4.7.0"
```

These are the versions I'm currently using; just run the following command:

```bash
npm install buffer minio-js readable-stream
```

Then you can mount them globally in main.js:

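```javascript
import { Buffer } from "buffer";
import { Readable as NodeReadable } from "readable-stream";

window.Buffer = Buffer; // expose Buffer globally for minio-js and the stream code
window.NodeReadable = NodeReadable; // used later to wrap the browser file stream
```

Finally comes the exciting part: import minio-js wherever you need it. (The official `minio` package is built for Node.js and can't be used in the browser, so I won't demonstrate it here.)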

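```javascript
import { Minio } from "minio-js";
```

Connect to your MinIO server:

```javascript
const MinioClient = new Minio.Client({
  endPoint: endPoint,
  port: Number(port), // port should be a number; convert it if your backend returns a string
  accessKey: accessKey,
  secretKey: secretKey,
  sessionToken: sessionToken,
  useSSL: false,
});
```

Fill these in to suit your setup; in my case I take them straight from a backend API response and pass them in.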
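Since the credentials come from a backend call, it can help to normalize that response before building the client. Below is a minimal sketch, assuming a hypothetical backend payload with `endPoint`, `port`, `accessKey`, `secretKey`, `sessionToken` and `useSSL` fields; both those field names and the helper `toMinioOptions` are illustrative assumptions, not part of minio-js. Its main job is to make sure `port` ends up as a number and that obviously incomplete credentials fail early.

```javascript
// Hypothetical helper: turn a backend credentials payload into Minio.Client options.
// The payload field names are assumptions; adapt them to your own API.
function toMinioOptions(raw) {
  const port = Number(raw.port);
  // Reject missing or out-of-range ports before the client ever sees them
  if (!Number.isInteger(port) || port < 1 || port > 65535) {
    throw new Error(`Invalid MinIO port: ${raw.port}`);
  }
  if (!raw.endPoint || !raw.accessKey || !raw.secretKey) {
    throw new Error("Incomplete MinIO credentials");
  }
  return {
    endPoint: raw.endPoint,
    port,
    accessKey: raw.accessKey,
    secretKey: raw.secretKey,
    // Temporary (STS) credentials carry a session token; static keys do not
    sessionToken: raw.sessionToken,
    // Accept either a boolean or the string "true" from the backend
    useSSL: raw.useSSL === true || raw.useSSL === "true",
  };
}
```

Usage would then be along the lines of `new Minio.Client(toMinioOptions(await response.json()))`, where `response` is the result of your own backend request.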

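Below is the presigned-URL direct upload approach. It does not support chunking; it simply uploads the whole File in a single request.

```javascript
// Presigned direct upload
const url = await MinioClient.presignedPutObject(
  bucket,
  params.file.name,
  3600, // URL expiry in seconds
);

fetch(url, {
  mode: "cors", // fetch's default; the MinIO server must still allow this origin
  headers: {
    // The body is the raw file, not form data, so send the file's own MIME type
    "Content-Type": params.file.type || "application/octet-stream",
  },
  method: "PUT",
  body: params.file, // params.file is the File object
})
  .then((response) => {
    if (response.ok) {
      // Success
      console.log(`${params.file.name} uploaded successfully`, response);
    } else {
      // The server rejected the upload
      console.log(`${params.file.name} upload failed, please try again`, response);
    }
  })
  .catch((error) => {
    // Network errors and CORS rejections end up here, not in .then
    console.error(`${params.file.name} upload failed:`, error);
  });
```

And this version supports chunked upload: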

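```javascript
const MinioClient = new Minio.Client({
  endPoint: endPoint,
  port: +port,
  accessKey: accessKey,
  secretKey: secretKey,
  sessionToken: sessionToken,
  useSSL: false,
});

try {
  // Convert the browser File into a Node-style readable stream
  const nodeStream = await fileToNodeStream(params.file);
  const metaData = {
    "Content-Type": params.file.type,
  };
  // Send the upload request
  const res = await MinioClient.putObject(
    bucket, // bucket name
    params.file.name,
    nodeStream,
    metaData, // metadata
  );
  console.log("Upload succeeded:", res);
} catch (error) {
  console.error("Upload failed:", error);
}
```

```javascript
// Wrap the browser ReadableStream from file.stream() in a Node-style Readable
async function fileToNodeStream(file, chunkSize = 3 * 1024 * 1024) {
  const reader = file.stream().getReader();
  return new NodeReadable({
    async read() {
      try {
        const { done, value } = await reader.read();
        if (done) {
          this.push(null); // end of file
          return;
        }
        // Split the browser chunk into pieces of at most chunkSize bytes.
        // Push every piece: returning early when push() returns false would drop
        // the rest of `value`. A false return is only a backpressure hint; the data
        // is still buffered, and read() is called again once the consumer drains it.
        for (let i = 0; i < value.length; i += chunkSize) {
          this.push(Buffer.from(value.subarray(i, i + chunkSize)));
        }
      } catch (err) {
        this.destroy(err);
      }
    },
  });
}
```

Why does the code above give us chunked upload? I went digging through the library source and found this: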

```javascript
/**
 * upload stream with MultipartUpload
 * @private
 */
async uploadStream(bucketName, objectName, headers, body, partSize) {
  // A map of the previously uploaded chunks, for resuming a file upload. This
  // will be null if we aren't resuming an upload.
  const oldParts = {};

  // Keep track of the etags for aggregating the chunks together later. Each
  // etag represents a single chunk of the file.
  const eTags = [];

  const previousUploadId = await this.findUploadId(bucketName, objectName);
  let uploadId;
  if (!previousUploadId) {
    uploadId = await this.initiateNewMultipartUpload(bucketName, objectName, headers);
  } else {
    uploadId = previousUploadId;
    const oldTags = await this.listParts(bucketName, objectName, previousUploadId);
    oldTags.forEach(e => {
      oldTags[e.part] = e;
    });
  }

  const chunkier = new BlockStream2({
    size: partSize,
    zeroPadding: false
  });

  // eslint-disable-next-line @typescript-eslint/no-unused-vars
  const [_, o] = await Promise.all([new Promise((resolve, reject) => {
    body.pipe(chunkier).on('error', reject);
    chunkier.on('end', resolve).on('error', reject);
  }), (async () => {
    let partNumber = 1;
    for await (const chunk of chunkier) {
      const md5 = crypto.createHash('md5').update(chunk).digest();
      const oldPart = oldParts[partNumber];
      if (oldPart) {
        if (oldPart.etag === md5.toString('hex')) {
          eTags.push({
            part: partNumber,
            etag: oldPart.etag
          });
          partNumber++;
          continue;
        }
      }
      partNumber++;

      // now start to upload missing part
      const options = {
        method: 'PUT',
        query: qs.stringify({
          partNumber,
          uploadId
        }),
        headers: {
          'Content-Length': chunk.length,
          'Content-MD5': md5.toString('base64')
        },
        bucketName,
        objectName
      };
      const response = await this.makeRequestAsyncOmit(options, chunk);
      let etag = response.headers.etag;
      if (etag) {
        etag = etag.replace(/^"/, '').replace(/"$/, '');
      } else {
        etag = '';
      }
      eTags.push({
        part: partNumber,
        etag
      });
    }
    return await this.completeMultipartUpload(bucketName, objectName, uploadId, eTags);
  })()]);

  return o;
}
```

So `putObject` in fact already implements both direct and chunked upload: files under 64 MB are sent in a single request, and files of 64 MB or more are automatically uploaded in multipart mode through the `uploadStream` method above. Of course, if anyone wants to port this logic into plain JS in their own code, that works too. Also note that the maximum file size is 5 GB; anything larger fails with an upload error.
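To make those size rules concrete, here is a minimal sketch of the decision exactly as this post describes it: a 64 MiB part size, a single request at or below that size, multipart above it, and the 5 GB ceiling reported above. `planUpload` and its return shape are hypothetical, treating exactly 64 MiB as "single" is an assumption, and the real thresholds live inside the library, so check them against the version you install. One more caveat: a library can only pick the single-request path if it knows the size up front. In the official `minio` package the fourth `putObject` argument is `size`, so it may be worth checking whether your minio-js build also accepts `params.file.size` there.

```javascript
const MiB = 1024 * 1024;
const PART_SIZE = 64 * MiB; // part size described in this post (assumption, not read from the library)
const MAX_SIZE = 5 * 1024 * MiB; // 5 GB ceiling reported in this post

// Hypothetical helper mirroring the rules described above; not a library API
function planUpload(size) {
  if (!Number.isInteger(size) || size < 0) {
    throw new TypeError(`size must be a non-negative integer, got ${size}`);
  }
  if (size > MAX_SIZE) {
    throw new RangeError(`file of ${size} bytes exceeds the ${MAX_SIZE}-byte limit`);
  }
  if (size <= PART_SIZE) {
    return { mode: "single", partCount: 1 };
  }
  // Every part is PART_SIZE bytes except the last, which holds the remainder
  const partCount = Math.ceil(size / PART_SIZE);
  return {
    mode: "multipart",
    partSize: PART_SIZE,
    partCount,
    lastPartSize: size - (partCount - 1) * PART_SIZE,
  };
}
```

For example, `planUpload(200 * MiB)` yields 4 parts: three of 64 MiB and a final 8 MiB part, while a 10 MiB file goes up in one request.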

And that's a wrap~~

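In short: turn the File into a stream on the front end and hand it to `putObject`. The file then goes straight from the browser to MinIO, which takes the load off our own server.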
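As an alternative way to write the `fileToNodeStream` conversion, here is a sketch that lets `Readable.from` drive an async generator, so the stream pulls data only when its consumer asks for more and no manual `push()` bookkeeping is needed. It assumes the `NodeReadable` and `Buffer` globals set up in main.js (readable-stream v4 mirrors Node's stream API, which includes `Readable.from`); the names `blobChunks` and `blobToNodeStream` are hypothetical.

```javascript
// Pull chunks from a Blob/File and re-emit them as Buffers of at most chunkSize bytes
async function* blobChunks(blob, chunkSize) {
  const reader = blob.stream().getReader();
  try {
    while (true) {
      const { done, value } = await reader.read();
      if (done) return;
      for (let i = 0; i < value.length; i += chunkSize) {
        // Buffer.from copies just this slice of the Uint8Array
        yield Buffer.from(value.subarray(i, i + chunkSize));
      }
    }
  } finally {
    // Release the reader whether we finished normally or the consumer stopped early
    reader.releaseLock();
  }
}

// Hypothetical alternative to fileToNodeStream; NodeReadable is the global from main.js
function blobToNodeStream(blob, chunkSize = 3 * 1024 * 1024) {
  // objectMode: false keeps this a plain byte stream of Buffers, like fileToNodeStream's output
  return NodeReadable.from(blobChunks(blob, chunkSize), { objectMode: false });
}
```

Because `blobToNodeStream` is synchronous, the call site becomes `const nodeStream = blobToNodeStream(params.file);` before passing it to `putObject`.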
