Late nights in thesis season: the moment I finished the big-data-based Uniqlo sales data analysis system, every all-nighter was worth it

Big-Data-Based Uniqlo Sales Data Analysis System

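1. About the Author

💖💖 Author: 计算机编程果茶熊

💙💙 About me: I spent many years teaching computer science training courses and worked as a programming instructor, and I enjoy teaching. I work across several IT areas, including Java, WeChat Mini Programs, Python, Golang, and Android. I take on custom project development, code walkthroughs, thesis-defense coaching, and documentation writing, and I know some techniques for reducing similarity scores in written work. I like sharing solutions to problems I run into during development and talking about technology, so feel free to ask me about anything code-related!

💛💛 A note: thank you all for following and supporting me!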


💕💕 See the end of the article to get the source code; contact 计算机编程果茶熊

2. System Overview

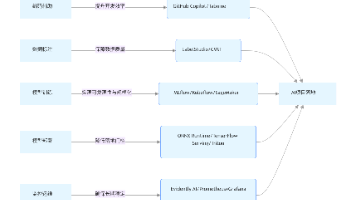

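Big data framework: Hadoop + Spark (Hive support requires custom modification)

Development languages: Java + Python (both versions are supported)

Database: MySQL

Backend frameworks: SpringBoot (Spring + SpringMVC + MyBatis) + Django (both versions are supported)

Frontend: Vue + ECharts + HTML + CSS + JavaScript + jQuery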

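The big-data-based Uniqlo sales data analysis system is a data analysis platform built on a big data technology stack. It uses HDFS, the Hadoop distributed file system, as its data storage layer and the Spark processing engine to compute and analyze large volumes of sales data. The backend is written in Python and exposes RESTful APIs through Django. It uses Pandas and NumPy for data cleaning, preprocessing, and statistical analysis, and Spark SQL for multidimensional queries and aggregations.

The frontend is built with Vue.js and the ElementUI component library for a responsive interface. It integrates ECharts for data visualization and relies on HTML5, CSS3, JavaScript, and jQuery for user interaction.

The system has eight core modules: home page analysis, overall performance analysis, product dimension analysis, region and channel analysis, customer value analysis, consumption pattern analysis, sales data viewing, and a large-screen visualization dashboard. Together they examine Uniqlo sales data from several angles to give business decisions a data basis. The architecture separates frontend and backend, uses MySQL as the relational database, and covers the full workflow from data collection, storage, and processing through to visualization, offering one technical approach to digital transformation in apparel retail.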
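To make the hand-off from the backend to the charts a little more concrete, here is a minimal sketch of how aggregated monthly rows might be shaped into an ECharts line-chart option on the Python side. The function name `build_line_chart_option` and the sample rows are hypothetical and do not come from the project; only the `xAxis` / `yAxis` / `series` layout follows the ECharts option format.

```python
import json


def build_line_chart_option(rows, title="Monthly revenue"):
    """Shape [{'month': 'YYYY-MM', 'monthly_revenue': number}, ...]
    into a dict that can be serialized and passed to ECharts setOption()."""
    # Sort by month so the x-axis is chronological ('YYYY-MM' sorts as text)
    ordered = sorted(rows, key=lambda r: r["month"])
    return {
        "title": {"text": title},
        "tooltip": {"trigger": "axis"},
        "xAxis": {"type": "category", "data": [r["month"] for r in ordered]},
        "yAxis": {"type": "value"},
        "series": [
            {
                "name": title,
                "type": "line",
                # float() keeps the payload JSON-serializable even if the
                # values arrive as decimal.Decimal
                "data": [float(r["monthly_revenue"]) for r in ordered],
            }
        ],
    }


if __name__ == "__main__":
    # Hypothetical sample rows, for illustration only
    sample = [
        {"month": "2024-02", "monthly_revenue": 200},
        {"month": "2024-01", "monthly_revenue": 100},
    ]
    print(json.dumps(build_line_chart_option(sample), indent=2))
```

A Django view could return this dict as JSON and the Vue component could pass it to the chart unchanged, which keeps chart layout decisions in one place; whether the project does it this way is not stated in the article.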

3. Big-Data-Based Uniqlo Sales Data Analysis System: Video Walkthrough


4. Big-Data-Based Uniqlo Sales Data Analysis System: Feature Showcase

5. Big-Data-Based Uniqlo Sales Data Analysis System: Code Showcase

# Core feature 1: overall performance analysis
# (a method of the analysis class; self.spark is that class's SparkSession)
def analyze_overall_performance(self):
    # Use Spark SQL to get total revenue, order count, average order value,
    # and distinct customers over the last 365 days
    total_sales_query = """
        SELECT
            SUM(order_amount) as total_revenue,
            COUNT(order_id) as total_orders,
            AVG(order_amount) as avg_order_value,
            COUNT(DISTINCT customer_id) as unique_customers
        FROM sales_data
        WHERE order_date >= date_sub(current_date(), 365)
    """
    performance_data = self.spark.sql(total_sales_query)

    # Monthly revenue and order counts, used for month-over-month growth
    monthly_growth_query = """
        SELECT
            DATE_FORMAT(order_date, 'yyyy-MM') as month,
            SUM(order_amount) as monthly_revenue,
            COUNT(order_id) as monthly_orders
        FROM sales_data
        WHERE order_date >= date_sub(current_date(), 365)
        GROUP BY DATE_FORMAT(order_date, 'yyyy-MM')
        ORDER BY month
    """
    monthly_data = self.spark.sql(monthly_growth_query).collect()

    # Months without any orders are absent from the result, so "previous"
    # here means the previous month that has data
    growth_rates = []
    for i in range(1, len(monthly_data)):
        current_revenue = monthly_data[i]['monthly_revenue']
        previous_revenue = monthly_data[i - 1]['monthly_revenue']
        # Skip pairs with missing or zero revenue to avoid dividing by zero
        if current_revenue is None or not previous_revenue:
            continue
        # float() because Spark DECIMAL values arrive as decimal.Decimal
        growth_rate = float(current_revenue - previous_revenue) / float(previous_revenue) * 100
        growth_rates.append({
            'month': monthly_data[i]['month'],
            'growth_rate': round(growth_rate, 2)
        })

    # Yearly revenue for the previous and current calendar year.
    # The current year is only partial, so the two totals are not
    # directly comparable until the year is complete.
    year_over_year_query = """
        SELECT
            YEAR(order_date) as year,
            SUM(order_amount) as yearly_revenue
        FROM sales_data
        WHERE YEAR(order_date) >= YEAR(current_date()) - 1
        GROUP BY YEAR(order_date)
    """
    yearly_comparison = self.spark.sql(year_over_year_query).collect()

    result = {
        'total_performance': performance_data.collect()[0].asDict(),
        'monthly_growth': growth_rates,
        'yearly_comparison': [row.asDict() for row in yearly_comparison]
    }
    return result
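The month-over-month calculation above can be tried without a Spark cluster. The following is a minimal standalone sketch, assuming the monthly totals have already been collected into a list of `(month, revenue)` pairs sorted by month; the helper name `month_over_month_growth` and the sample figures are hypothetical.

```python
def month_over_month_growth(monthly_revenue):
    """Return the percentage change from each month to the next.

    monthly_revenue: list of (month, revenue) tuples sorted by month.
    A growth rate of None means the previous month's revenue was zero
    or missing, so no percentage can be computed.
    """
    rates = []
    # Pair every month with the one that follows it
    for (_, previous), (month, current) in zip(monthly_revenue, monthly_revenue[1:]):
        if not previous:
            rates.append({"month": month, "growth_rate": None})
            continue
        growth = (current - previous) / previous * 100
        rates.append({"month": month, "growth_rate": round(growth, 2)})
    return rates


if __name__ == "__main__":
    # Hypothetical figures, for illustration only
    sample = [("2024-01", 100.0), ("2024-02", 120.0), ("2024-03", 90.0)]
    for entry in month_over_month_growth(sample):
        print(entry)
```

Returning `None` instead of skipping the month is a design choice of this sketch: it keeps the output aligned with the input months, which can make charting simpler.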

import pandas as pd  # required by this method


# Core feature 2: product dimension analysis
def analyze_product_dimensions(self):
    # Rank products by revenue over the last 90 days (top 50)
    product_ranking_query = """
        SELECT
            p.product_id,
            p.product_name,
            p.category,
            p.brand,
            SUM(sd.quantity) as total_quantity,
            SUM(sd.order_amount) as total_revenue,
            AVG(sd.unit_price) as avg_price,
            COUNT(DISTINCT sd.customer_id) as unique_buyers
        FROM sales_data sd
        JOIN products p ON sd.product_id = p.product_id
        WHERE sd.order_date >= date_sub(current_date(), 90)
        GROUP BY p.product_id, p.product_name, p.category, p.brand
        ORDER BY total_revenue DESC
        LIMIT 50
    """
    product_rankings = self.spark.sql(product_ranking_query)

    # Monthly sales per product over the last 365 days, used to estimate
    # each product's lifecycle stage
    product_lifecycle_query = """
        SELECT
            product_id,
            DATE_FORMAT(order_date, 'yyyy-MM') as month,
            SUM(quantity) as monthly_sales,
            SUM(order_amount) as monthly_revenue
        FROM sales_data
        WHERE order_date >= date_sub(current_date(), 365)
        GROUP BY product_id, DATE_FORMAT(order_date, 'yyyy-MM')
    """
    lifecycle_data = self.spark.sql(product_lifecycle_query).collect()

    # Product trend analysis with pandas
    product_trends = {}
    if lifecycle_data:  # an empty result would have no columns to index
        df_lifecycle = pd.DataFrame([row.asDict() for row in lifecycle_data])
        df_lifecycle['month'] = pd.to_datetime(df_lifecycle['month'], format='%Y-%m')
        # Spark DECIMAL values arrive as decimal.Decimal; cast so the
        # rolling mean below works on a numeric column
        df_lifecycle['monthly_sales'] = df_lifecycle['monthly_sales'].astype(float)
        for product_id in df_lifecycle['product_id'].unique():
            # .copy() so adding a column does not write into a slice
            product_data = (df_lifecycle[df_lifecycle['product_id'] == product_id]
                            .sort_values('month').copy())
            # Sales trend: 3-month simple moving average
            product_data['sales_trend'] = product_data['monthly_sales'].rolling(window=3).mean()
            # Compare the last 3 months with the first 3 months.
            # With fewer than 6 months of data the two windows overlap.
            recent_sales = product_data['monthly_sales'].tail(3).mean()
            early_sales = product_data['monthly_sales'].head(3).mean()
            if recent_sales > early_sales * 1.2:
                stage = "Growth"
            elif recent_sales < early_sales * 0.8:
                stage = "Decline"
            else:
                stage = "Maturity"
            product_trends[product_id] = {
                'stage': stage,
                'trend_data': product_data.to_dict('records')
            }

    # Revenue contribution by category over the last 90 days
    category_analysis_query = """
        SELECT
            p.category,
            SUM(sd.order_amount) as category_revenue,
            COUNT(sd.order_id) as category_orders,
            AVG(sd.order_amount) as avg_order_value
        FROM sales_data sd
        JOIN products p ON sd.product_id = p.product_id
        WHERE sd.order_date >= date_sub(current_date(), 90)
        GROUP BY p.category
        ORDER BY category_revenue DESC
    """
    category_analysis = self.spark.sql(category_analysis_query)

    return {
        'product_rankings': [row.asDict() for row in product_rankings.collect()],
        'product_lifecycle': product_trends,
        'category_analysis': [row.asDict() for row in category_analysis.collect()]
    }
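The lifecycle rule used above (compare the average of the last three months with the average of the first three, with 1.2 and 0.8 as thresholds) is easy to check in isolation. Below is a minimal sketch using only the standard library; the function names, the English stage labels, and the sample series are illustrative assumptions rather than part of the project.

```python
from statistics import mean


def moving_average(values, window=3):
    """Simple moving average; positions without a full window are None."""
    result = []
    for i in range(len(values)):
        if i + 1 < window:
            result.append(None)
        else:
            result.append(mean(values[i + 1 - window:i + 1]))
    return result


def classify_lifecycle_stage(monthly_sales, window=3, up=1.2, down=0.8):
    """Label a product by comparing recent sales with early sales.

    monthly_sales: list of monthly quantities in chronological order.
    """
    if not monthly_sales:
        return "Unknown"
    early = mean(monthly_sales[:window])    # first `window` months
    recent = mean(monthly_sales[-window:])  # last `window` months
    if recent > early * up:
        return "Growth"
    if recent < early * down:
        return "Decline"
    return "Maturity"


if __name__ == "__main__":
    # Hypothetical monthly quantities, for illustration only
    series = [10, 12, 11, 20, 25, 30]
    print(moving_average(series))
    print(classify_lifecycle_stage(series))
```

Note that this rule only looks at the two ends of the series, so a product that peaked in the middle of the year and returned to its starting level would be labeled as mature.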

import pandas as pd  # required by this method


# Core feature 3: customer value analysis
def analyze_customer_value(self):
    # RFM model: recency (days since last purchase), frequency (order count),
    # and monetary (total spend) per customer over the last 365 days
    rfm_analysis_query = """
        SELECT
            customer_id,
            DATEDIFF(current_date(), MAX(order_date)) as recency,
            COUNT(order_id) as frequency,
            SUM(order_amount) as monetary,
            AVG(order_amount) as avg_order_value,
            MAX(order_date) as last_purchase_date,
            MIN(order_date) as first_purchase_date
        FROM sales_data
        WHERE order_date >= date_sub(current_date(), 365)
        GROUP BY customer_id
        HAVING COUNT(order_id) >= 1
    """
    rfm_data = self.spark.sql(rfm_analysis_query).collect()
    # pd.qcut needs distinct bin edges, which an empty or single-customer
    # result cannot provide
    if len(rfm_data) < 2:
        return {'rfm_analysis': [], 'segment_statistics': {},
                'high_value_customers': [], 'behavior_patterns': []}

    # Compute RFM scores with pandas
    df_rfm = pd.DataFrame([row.asDict() for row in rfm_data])
    # Spark DECIMAL values arrive as decimal.Decimal; cast before arithmetic
    for col in ('monetary', 'avg_order_value'):
        df_rfm[col] = df_rfm[col].astype(float)

    # Scores from 1 to 5 by quintile; a smaller recency earns a higher R score
    df_rfm['R_score'] = pd.qcut(df_rfm['recency'].rank(method='first'), 5, labels=[5, 4, 3, 2, 1]).astype(int)
    df_rfm['F_score'] = pd.qcut(df_rfm['frequency'].rank(method='first'), 5, labels=[1, 2, 3, 4, 5]).astype(int)
    df_rfm['M_score'] = pd.qcut(df_rfm['monetary'].rank(method='first'), 5, labels=[1, 2, 3, 4, 5]).astype(int)

    # Customer segmentation
    def classify_customer(row):
        r, f, m = int(row['R_score']), int(row['F_score']), int(row['M_score'])
        if r >= 4 and f >= 4 and m >= 4:
            return "Champions"
        elif r >= 3 and f >= 3 and m >= 3:
            return "Loyal"
        elif r >= 4 and f <= 2 and m <= 2:
            return "New"
        elif r <= 2 and f >= 3 and m >= 3:
            return "Win-back"
        elif r <= 2 and f <= 2:
            return "Churned"
        else:
            return "Regular"

    df_rfm['customer_segment'] = df_rfm.apply(classify_customer, axis=1)

    # Customer lifetime value (a simple heuristic, not a fitted model)
    df_rfm['customer_lifespan'] = (pd.to_datetime(df_rfm['last_purchase_date']) -
                                   pd.to_datetime(df_rfm['first_purchase_date'])).dt.days
    df_rfm['purchase_interval'] = df_rfm['customer_lifespan'] / df_rfm['frequency']
    # Customers with a single purchase have a lifespan of 0 days,
    # so their predicted value is 0 under this formula
    df_rfm['predicted_clv'] = (df_rfm['avg_order_value'] * df_rfm['frequency'] *
                               (df_rfm['customer_lifespan'] / 365) * 1.2)

    # Per-segment statistics; named aggregation keeps the column names flat
    # so the result converts to a dict with plain string keys
    segment_stats = df_rfm.groupby('customer_segment').agg(
        customer_count=('customer_id', 'count'),
        monetary_mean=('monetary', 'mean'),
        monetary_sum=('monetary', 'sum'),
        frequency_mean=('frequency', 'mean'),
        recency_mean=('recency', 'mean'),
        predicted_clv_mean=('predicted_clv', 'mean'),
    ).round(2)

    # High-value customers
    high_value_customers = df_rfm[df_rfm['customer_segment'].isin(['Champions', 'Loyal'])]

    # Purchase behavior of up to 100 high-value customers
    top_ids = high_value_customers['customer_id'].head(100).tolist()
    behavior_data = []
    if top_ids:
        # Assumes numeric customer IDs; string IDs would need quoting
        id_list = ','.join(str(x) for x in top_ids)
        behavior_analysis_query = """
            SELECT
                sd.customer_id,
                HOUR(sd.order_time) as purchase_hour,
                DAYOFWEEK(sd.order_date) as purchase_day,
                p.category as preferred_category,
                COUNT(*) as purchase_count
            FROM sales_data sd
            JOIN products p ON sd.product_id = p.product_id
            WHERE sd.customer_id IN ({})
            GROUP BY sd.customer_id, HOUR(sd.order_time), DAYOFWEEK(sd.order_date), p.category
        """.format(id_list)
        behavior_data = self.spark.sql(behavior_analysis_query).collect()

    return {
        'rfm_analysis': df_rfm.to_dict('records'),
        'segment_statistics': segment_stats.to_dict(),
        'high_value_customers': high_value_customers.head(50).to_dict('records'),
        'behavior_patterns': [row.asDict() for row in behavior_data]
    }
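To see how the RFM scoring and segment rules above behave, here is a minimal standard-library sketch. It replaces `pd.qcut` over ranks with a simple rank-based split into five equal-sized buckets, which is a stand-in for illustration and may assign borderline customers differently. The function names, English segment labels, and sample customers are all assumptions of this sketch.

```python
def quintile_scores(values, reverse=False):
    """Score each value from 1 to 5 by its rank among all values.

    Ties are broken by input order. With reverse=True the smallest
    values receive the highest score (used for recency).
    """
    n = len(values)
    order = sorted(range(n), key=lambda i: values[i])  # stable sort
    scores = [0] * n
    for rank, idx in enumerate(order):
        score = rank * 5 // n + 1  # rank 0..n-1 mapped onto 1..5
        scores[idx] = 6 - score if reverse else score
    return scores


def classify_customer(r, f, m):
    """Apply the segment rules in priority order."""
    if r >= 4 and f >= 4 and m >= 4:
        return "Champions"
    if r >= 3 and f >= 3 and m >= 3:
        return "Loyal"
    if r >= 4 and f <= 2 and m <= 2:
        return "New"
    if r <= 2 and f >= 3 and m >= 3:
        return "Win-back"
    if r <= 2 and f <= 2:
        return "Churned"
    return "Regular"


if __name__ == "__main__":
    # Hypothetical customers: days since last order, order count, total spend
    recency = [1, 5, 10, 30, 60, 90, 120, 200, 300, 360]
    frequency = [20, 15, 12, 9, 7, 5, 4, 3, 2, 1]
    monetary = [5000, 4000, 3000, 2500, 2000, 1500, 1000, 800, 400, 100]

    r_scores = quintile_scores(recency, reverse=True)
    f_scores = quintile_scores(frequency)
    m_scores = quintile_scores(monetary)
    for r, f, m in zip(r_scores, f_scores, m_scores):
        print((r, f, m), classify_customer(r, f, m))
```

Because the rules are checked in order, a customer who satisfies more than one condition receives the first matching label, and anything that matches none of them falls through to "Regular".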

6. Big-Data-Based Uniqlo Sales Data Analysis System: Documentation Showcase

7. END

💕💕 To get the source code, contact 计算机编程果茶熊
